Training Supervised AI Models Without Labeled Data

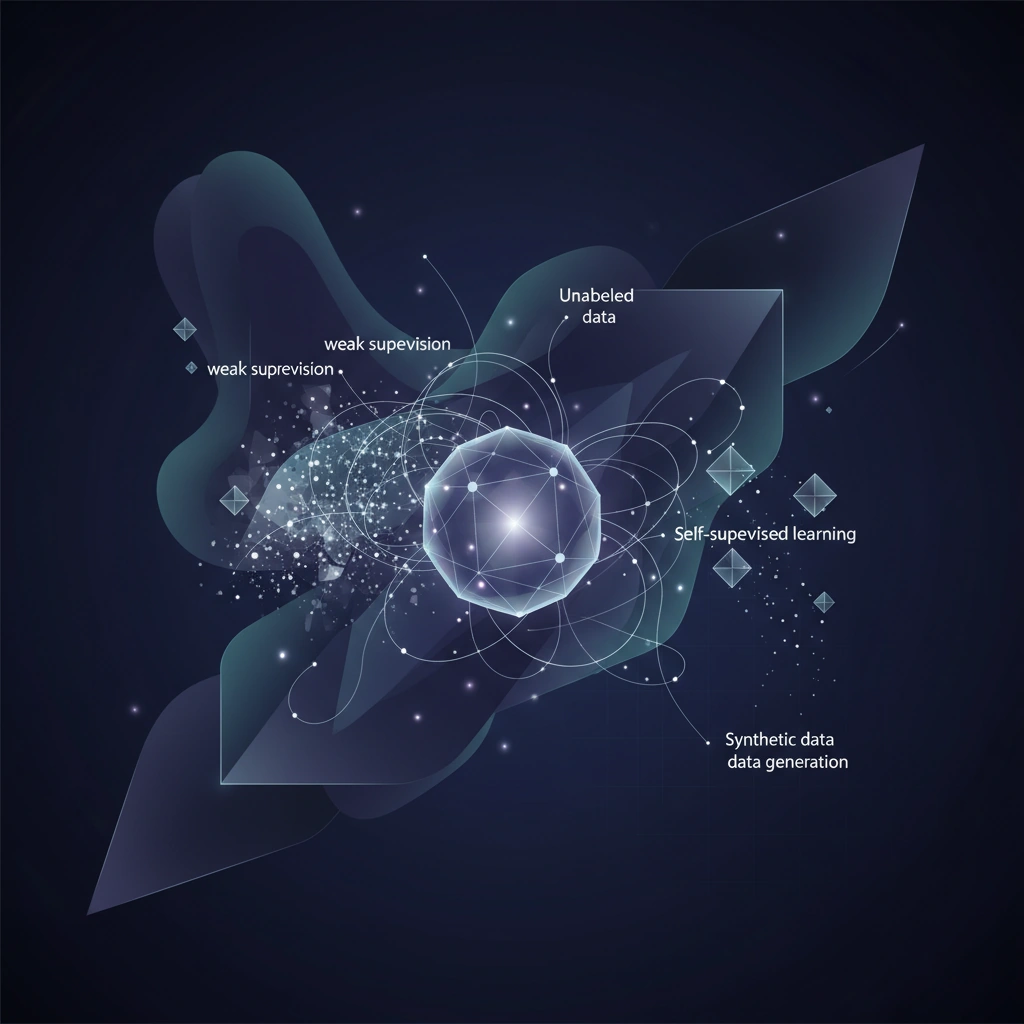

Explore practical techniques for building supervised machine learning models when annotated training data is unavailable, including weak supervision, self-supervised learning, and synthetic data generation approaches.

The scarcity of labeled training data represents one of the most significant bottlenecks in developing supervised machine learning models. While supervised learning has driven breakthroughs across computer vision, natural language processing, and synthetic media generation, the process of manually annotating datasets remains expensive, time-consuming, and often impractical at scale.

Recent advances in machine learning methodology offer multiple pathways for building effective supervised models even when high-quality labeled data is limited or unavailable. These approaches range from leveraging noisy labels to generating synthetic training examples, each with distinct advantages for different problem domains.

Weak Supervision: Learning from Noisy Labels

Weak supervision frameworks enable model training using imperfect or programmatically generated labels rather than precise human annotations. This approach typically involves defining labeling functions: simple rules or heuristics that automatically assign labels to unlabeled data, even if some of those labels are wrong.

Tools like Snorkel have popularized this methodology by providing frameworks that aggregate multiple weak labeling sources, each potentially noisy, into probabilistic training labels. The system estimates the accuracy of each labeling function and the correlations among them, then produces training labels that are often good enough to train competitive models.

For video deepfake detection applications, weak supervision might involve labeling functions based on inexpensive signals (upload source, compression artifacts, frame consistency) rather than expensive frame-by-frame human review. Aggregated, these imperfect signals can label training sets large enough to learn meaningful patterns.
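To make the idea concrete, here is a minimal sketch of three labeling functions combined by a simple vote. It is not Snorkel's API, and a plain vote is a much cruder aggregator than a label model that estimates each function's accuracy. The field names (`upload_source`, `recompression_count`, `frame_consistency`) and every threshold are hypothetical, chosen only for illustration.

```python
ABSTAIN = -1
REAL, FAKE = 0, 1

# Hypothetical set of sources considered untrustworthy.
UNTRUSTED_SOURCES = {"unknown_mirror"}

def lf_untrusted_source(video):
    # Votes FAKE for untrusted upload sources, otherwise abstains.
    return FAKE if video["upload_source"] in UNTRUSTED_SOURCES else ABSTAIN

def lf_heavy_recompression(video):
    # Votes FAKE when the file was re-encoded many times (assumed threshold).
    return FAKE if video["recompression_count"] >= 3 else ABSTAIN

def lf_consistent_frames(video):
    # Votes REAL when a frame-consistency score is high (assumed threshold).
    return REAL if video["frame_consistency"] >= 0.9 else ABSTAIN

LABELING_FUNCTIONS = [lf_untrusted_source, lf_heavy_recompression, lf_consistent_frames]

def weak_label(video):
    """Return the fraction of non-abstaining votes for FAKE, or None if all abstain."""
    votes = [lf(video) for lf in LABELING_FUNCTIONS]
    votes = [v for v in votes if v != ABSTAIN]
    if not votes:
        return None  # no labeling function fired; leave the example unlabeled
    return sum(1 for v in votes if v == FAKE) / len(votes)
```

The returned fraction can serve as a soft (probabilistic) training label. Examples on which every function abstains stay unlabeled rather than receiving a guess.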

Self-Supervised Learning Approaches

Self-supervised learning creates supervisory signals directly from the data structure itself, eliminating the need for external annotations. The model learns by solving pretext tasks that require understanding underlying data patterns.

Common pretext tasks include masked prediction (predicting hidden portions of inputs), contrastive learning (distinguishing similar from dissimilar examples), and next-frame prediction in video sequences. BERT's masked language modeling and SimCLR's contrastive visual learning exemplify this approach.
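As an illustration of masked prediction, the sketch below turns an unlabeled token sequence into an (input, target) training pair. It is a simplification: BERT's actual procedure also replaces some selected tokens with random tokens or leaves them unchanged. The function name and the `[MASK]` string are illustrative choices.

```python
import random

MASK = "[MASK]"

def make_masked_example(tokens, mask_prob=0.15, rng=None):
    """Hide a random subset of tokens; return (masked_tokens, {position: original_token})."""
    rng = rng or random.Random()
    masked, targets = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            masked.append(MASK)
            targets[i] = tok  # the model is trained to recover this token
        else:
            masked.append(tok)
    return masked, targets
```

The supervision comes entirely from the data: the targets are the tokens that were hidden, so no human annotation is involved.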

For synthetic media applications, self-supervised models can learn representations by predicting temporal consistency in video frames, reconstructing masked image regions, or identifying correspondence between audio and visual streams. These learned representations then serve as foundations for downstream supervised tasks with minimal labeled data through transfer learning.
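One possible temporal pretext task is order verification: sample three frames from an unlabeled clip and ask the model whether they appear in their original order. The sketch below only builds the training examples. The function name is hypothetical, and frames are stood in for by any list items.

```python
import random

def make_order_example(frames, rng=None):
    """Return (triplet, label): label is 1 if the triplet keeps temporal order, else 0."""
    rng = rng or random.Random()
    # Pick three distinct frame positions and put them in temporal order.
    i, j, k = sorted(rng.sample(range(len(frames)), 3))
    triplet = [frames[i], frames[j], frames[k]]
    if rng.random() < 0.5:
        return triplet, 1
    # Negative example: swap the first two frames to break the ordering.
    return [triplet[1], triplet[0], triplet[2]], 0
```

A network trained on such pairs has to pick up on motion and temporal coherence, which are plausible cues for downstream tasks such as manipulation detection.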

Semi-Supervised Learning Strategies

Semi-supervised methods leverage small amounts of labeled data alongside large unlabeled datasets. Pseudo-labeling is a straightforward approach: train an initial model on the available labeled examples, use it to predict labels for the unlabeled data, keep only the high-confidence predictions as pseudo-labels, then retrain on the labeled and pseudo-labeled examples together.
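The loop can be sketched with a deliberately tiny model. The example below assumes 1-D features and a nearest-centroid classifier with a softmax-like confidence score. Both are stand-ins for whatever model is actually used, and the 0.9 threshold is an arbitrary illustrative value.

```python
import math

def fit_centroids(xs, ys):
    """Return {label: mean of the 1-D features carrying that label}."""
    groups = {}
    for x, y in zip(xs, ys):
        groups.setdefault(y, []).append(x)
    return {y: sum(v) / len(v) for y, v in groups.items()}

def predict_proba(centroids, x):
    """Turn distances to each centroid into normalized confidence scores."""
    scores = {y: math.exp(-abs(x - c)) for y, c in centroids.items()}
    total = sum(scores.values())
    return {y: s / total for y, s in scores.items()}

def pseudo_label_round(labeled_x, labeled_y, unlabeled_x, threshold=0.9):
    """One round: fit, pseudo-label confident points, refit on the union."""
    model = fit_centroids(labeled_x, labeled_y)
    train_x, train_y, remaining = list(labeled_x), list(labeled_y), []
    for x in unlabeled_x:
        probs = predict_proba(model, x)
        label = max(probs, key=probs.get)
        if probs[label] >= threshold:
            train_x.append(x)      # accept the prediction as a pseudo-label
            train_y.append(label)
        else:
            remaining.append(x)    # too uncertain; keep it unlabeled for now
    return fit_centroids(train_x, train_y), remaining
```

In practice the round is repeated until few confident examples remain. The threshold controls the trade-off between adding more data and reinforcing the model's own mistakes.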

More sophisticated techniques include consistency regularization, which enforces that models produce similar predictions for perturbed versions of the same input. MixMatch and FixMatch combine pseudo-labeling with consistency regularization, achieving strong performance with remarkably few labeled examples.
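A FixMatch-style loss on unlabeled data can be sketched as follows, assuming the model's class probabilities for a weakly and a strongly augmented view of each input have already been computed. The function name and list-of-lists input format are illustrative, and the augmentations themselves are out of scope here.

```python
import math

def fixmatch_style_loss(probs_weak, probs_strong, threshold=0.95):
    """Cross-entropy between confident weak-view pseudo-labels and strong-view predictions.

    probs_weak / probs_strong: one list of class probabilities per unlabeled example.
    Examples whose weak-view confidence is below the threshold contribute zero.
    """
    total = 0.0
    for p_weak, p_strong in zip(probs_weak, probs_strong):
        confidence = max(p_weak)
        if confidence < threshold:
            continue  # prediction not confident enough to act as a pseudo-label
        pseudo_label = p_weak.index(confidence)
        # Clamp to avoid log(0) if the strong view assigns zero probability.
        total += -math.log(max(p_strong[pseudo_label], 1e-12))
    return total / len(probs_weak)  # average over the whole unlabeled batch
```

The loss is low only when the prediction on the heavily perturbed view agrees with the confident prediction on the lightly perturbed one, which is the consistency constraint described above.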

Co-training offers another strategy where multiple models with different architectures or views of the data teach each other by labeling examples for mutual training. This approach proves particularly effective when different feature representations provide complementary information.
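A simplified co-training round might look like the sketch below. It assumes each example has two 1-D views and uses a toy nearest-centroid model per view. Whichever view is confident about an unlabeled example supplies its label to the shared training pool. All names and the threshold are hypothetical.

```python
import math

def fit(xs, ys):
    """Nearest-centroid model on one view: {label: mean feature value}."""
    groups = {}
    for x, y in zip(xs, ys):
        groups.setdefault(y, []).append(x)
    return {y: sum(v) / len(v) for y, v in groups.items()}

def predict(model, x):
    """Return (most likely label, normalized confidence) for one view."""
    scores = {y: math.exp(-abs(x - c)) for y, c in model.items()}
    label = max(scores, key=scores.get)
    return label, scores[label] / sum(scores.values())

def co_training_round(labeled, unlabeled, threshold=0.9):
    """labeled: [((view_a, view_b), label)]; unlabeled: [(view_a, view_b)]."""
    labels = [y for _, y in labeled]
    model_a = fit([views[0] for views, _ in labeled], labels)
    model_b = fit([views[1] for views, _ in labeled], labels)
    newly_labeled, remaining = [], []
    for views in unlabeled:
        label_a, conf_a = predict(model_a, views[0])
        label_b, conf_b = predict(model_b, views[1])
        if max(conf_a, conf_b) >= threshold:
            # The more confident view teaches the other through the shared pool.
            label = label_a if conf_a >= conf_b else label_b
            newly_labeled.append((views, label))
        else:
            remaining.append(views)
    return labeled + newly_labeled, remaining
```

The benefit comes from examples that one view finds ambiguous but the other finds easy, which is why complementary views matter.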

Synthetic Data Generation

Generating artificial training data with automatic labels provides another solution to annotation scarcity. Graphics engines can render synthetic images with perfect ground truth labels for object detection, semantic segmentation, or pose estimation tasks.
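As a toy stand-in for a graphics engine, the sketch below "renders" a bright rectangle on a dark grid and returns its bounding box as ground truth. The point is only that the label comes for free from the generation process. The function name, image size, and box format are illustrative assumptions.

```python
import random

def render_sample(size=16, rng=None):
    """Return (image, box): a size x size grid with one rectangle and its (x0, y0, w, h)."""
    rng = rng or random.Random()
    w = rng.randint(2, size // 2)
    h = rng.randint(2, size // 2)
    x0 = rng.randint(0, size - w)
    y0 = rng.randint(0, size - h)
    image = [[0.0] * size for _ in range(size)]
    for y in range(y0, y0 + h):
        for x in range(x0, x0 + w):
            image[y][x] = 1.0  # object pixels
    # The bounding box is exact because we placed the object ourselves.
    return image, (x0, y0, w, h)
```

Calling `render_sample` in a loop yields an arbitrarily large detection dataset with exact labels.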

Generative models like GANs and diffusion models can create realistic training examples with known labels. For deepfake detection, researchers generate synthetic manipulated videos with controlled artifacts, providing labeled training data that would be expensive to create through manual manipulation and annotation.

The challenge lies in bridging the domain gap between synthetic and real data. Techniques like domain randomization, adding realistic noise, and domain adaptation help models trained on synthetic data generalize to real-world inputs.
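A rough sketch of the randomization idea, applied at the pixel level: each synthetic image gets a random brightness gain, background offset, and sensor-like noise, while its label stays unchanged. Real domain randomization usually varies simulator parameters such as textures and lighting at render time, and the ranges below are arbitrary illustrative values.

```python
import random

def randomize_domain(image, rng=None, noise_std=0.05):
    """Return a copy of a grayscale image (rows of floats in [0, 1]) with random appearance."""
    rng = rng or random.Random()
    gain = rng.uniform(0.6, 1.0)    # random overall brightness scaling
    offset = rng.uniform(0.0, 0.3)  # random background level
    out = []
    for row in image:
        new_row = []
        for pixel in row:
            value = gain * pixel + offset + rng.gauss(0.0, noise_std)
            new_row.append(min(1.0, max(0.0, value)))  # clamp to the valid range
        out.append(new_row)
    return out
```

Training on many randomized variants of each synthetic image discourages the model from relying on appearance details that will differ in real data.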

Active Learning for Strategic Annotation

When some annotation budget exists, active learning strategically selects which examples humans should label to maximize model improvement. The model identifies uncertain or informative examples that would most benefit training if labeled, prioritizing annotation effort where it matters most.

Query strategies include uncertainty sampling (labeling examples where the model is least confident), diversity sampling (ensuring labeled data covers the input space), and expected model change (selecting examples that would most alter model parameters).
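Uncertainty sampling is the simplest of these to sketch. The example below assumes the model's class probabilities for each unlabeled pool item are already available and ranks items by predictive entropy. The function names are illustrative.

```python
import math

def entropy(probs):
    """Shannon entropy of a class-probability vector (higher means less certain)."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def select_for_annotation(pool_probs, budget):
    """Return indices of the `budget` pool items the model is least certain about."""
    ranked = sorted(
        range(len(pool_probs)),
        key=lambda i: entropy(pool_probs[i]),
        reverse=True,  # most uncertain first
    )
    return ranked[:budget]
```

For instance, given pool predictions `[[0.99, 0.01], [0.5, 0.5], [0.8, 0.2]]` and a budget of 2, the second and third items are sent for labeling, since the first is already predicted with high confidence.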

Implementation Considerations

Building supervised models with little or no hand-labeled data requires careful attention to the problem domain. Label quality often matters more than quantity: noisy weak labels or poorly generated synthetic data can harm model performance. A validation set with clean, human-verified labels remains essential for reliable evaluation.

Combining multiple approaches often yields the best results. Starting with self-supervised pre-training, adding weak supervision for task-specific signals, and fine-tuning with limited high-quality labels through active learning creates a robust training pipeline that maximizes available resources.

As AI systems become increasingly central to video authentication, content moderation, and synthetic media detection, the ability to train effective models without massive labeled datasets becomes critical. These methodologies democratize AI development, enabling practitioners to build sophisticated systems even with limited annotation resources.
