VAEs Explained: The Hidden Engine Powering Modern AI Video

Variational Autoencoders compress images, audio, and video into compact mathematical latent spaces, enabling everything from Stable Diffusion to AI video generation. Here's how the Bayesian math actually works.

If you've used Stable Diffusion, watched an AI-generated video, or marveled at how deepfake technology can synthesize realistic faces, you've seen Variational Autoencoders (VAEs), or their close relative the plain autoencoder, at work. These elegant mathematical constructs sit at the heart of modern generative AI, yet their underlying principles remain poorly understood outside academic circles.

What Makes VAEs Unreasonably Effective?

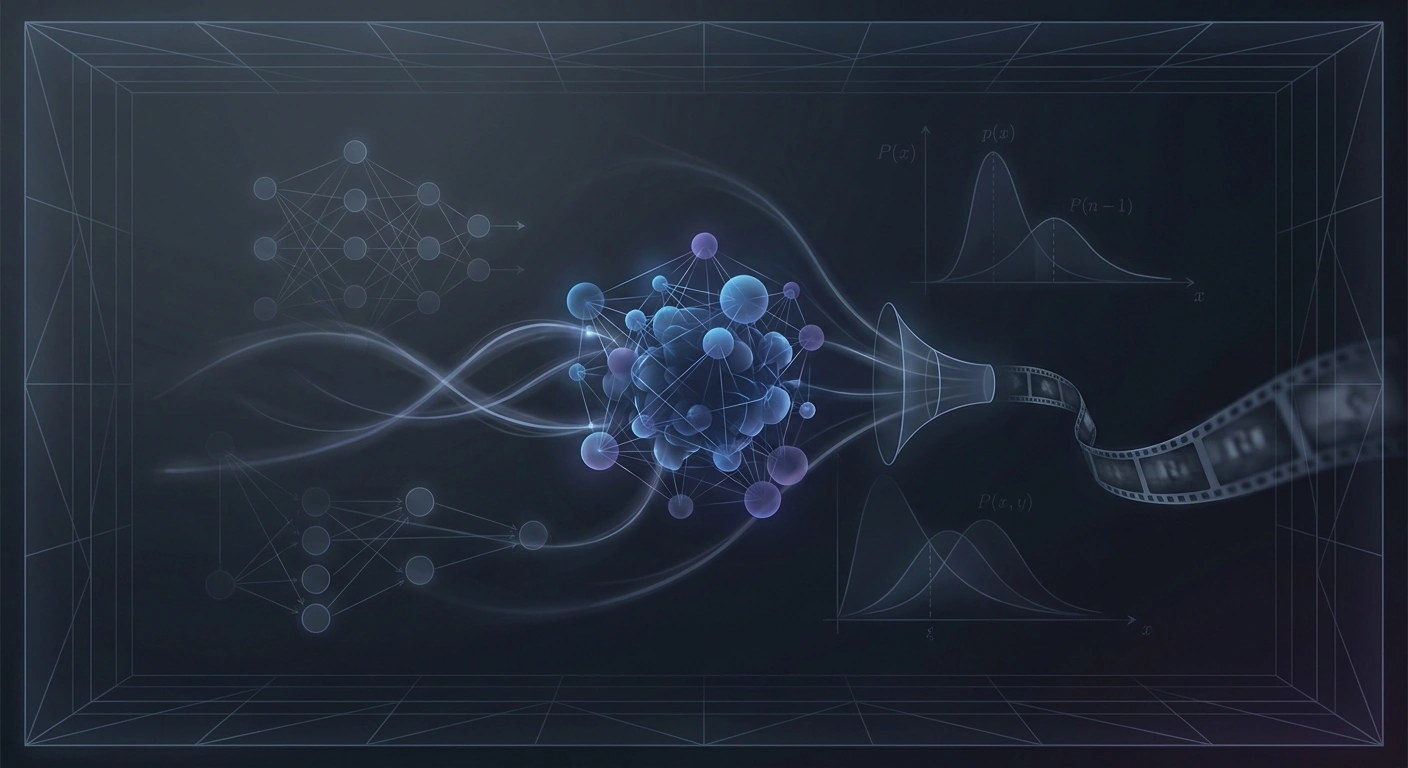

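The key strength of VAEs is their ability to compress high-dimensional data (images, audio, video frames) into compact, continuous latent representations that can be manipulated, interpolated, and decoded back into realistic outputs. A traditional autoencoder learns a deterministic mapping from each input to a single latent point, and nothing forces the space between those points to decode to anything sensible. A VAE instead maps each input to a probability distribution over the latent space and pulls those distributions toward a shared prior, which keeps the space smooth enough to sample from and move through.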
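"Interpolated" has a concrete meaning here: pick the latent codes of two inputs and decode points along the path between them. The sketch below is a minimal, framework-free illustration that assumes latents are plain lists of floats; `lerp`, `interpolation_path`, and the `decode` function in the comment are hypothetical names standing in for a real trained model.

```python
from typing import List, Sequence

Vector = List[float]


def lerp(z_a: Sequence[float], z_b: Sequence[float], t: float) -> Vector:
    """Linearly interpolate between two latent vectors; t=0 gives z_a, t=1 gives z_b."""
    if len(z_a) != len(z_b):
        raise ValueError("latent vectors must have the same dimension")
    return [(1.0 - t) * a + t * b for a, b in zip(z_a, z_b)]


def interpolation_path(z_a: Sequence[float], z_b: Sequence[float], steps: int) -> List[Vector]:
    """Return `steps` evenly spaced latents from z_a to z_b, endpoints included."""
    if steps < 2:
        raise ValueError("need at least two steps")
    return [lerp(z_a, z_b, i / (steps - 1)) for i in range(steps)]


# Hypothetical usage: `decode` stands in for a trained VAE decoder.
# frames = [decode(z) for z in interpolation_path(z_a, z_b, steps=8)]
```

In a well-regularized VAE every point on this path decodes to a plausible output; with a plain autoencoder the midpoints often land in regions the decoder never learned. Linear interpolation is the simplest choice; spherical interpolation is a common alternative for Gaussian latents.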

This distinction matters enormously for generative applications. When you ask Stable Diffusion to generate an image, the model works in the latent space of a VAE-style autoencoder rather than on raw pixels. Video synthesis systems such as those from Runway or Pika typically rely on the same kind of latent compression, which is what makes generating many high-resolution frames computationally feasible.

The Bayesian Foundation

VAEs rest on Bayesian probability theory, specifically variational inference. The core insight is to treat encoding as inference, estimating a probability distribution over possible latent representations given an input, rather than as a deterministic mapping.

Mathematically, this involves approximating an intractable posterior distribution p(z|x) with a tractable encoder distribution q(z|x). The encoder doesn't output a single point in latent space; it outputs the parameters of a probability distribution, typically the mean and variance of a Gaussian.
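Bayes' rule shows where the intractability comes from: the evidence p(x) integrates the decoder's likelihood over every possible latent, and that integral has no closed form when p(x|z) is a neural network. The encoder sidesteps it by predicting the parameters of a Gaussian directly. In the sketch below, φ (the encoder's weights) and the diagonal covariance are standard conventions assumed for illustration, not details stated in this article.

```latex
p(z \mid x) = \frac{p(x \mid z)\, p(z)}{p(x)}, \qquad
p(x) = \int p(x \mid z)\, p(z)\, dz \quad \text{(intractable)}

q_\phi(z \mid x) = \mathcal{N}\!\left(z;\ \mu_\phi(x),\ \operatorname{diag}\!\big(\sigma_\phi^2(x)\big)\right)
```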

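The Gaussian encoder makes the "reparameterization trick" possible. Sampling directly from q(z|x) is not differentiable with respect to the mean and variance, so the trick rewrites the sample as z = μ + σ·ε, where ε is drawn from a standard Gaussian. The randomness now lives in ε, and gradients flow through μ and σ during backpropagation. Without it, training would have to rely on score-function (REINFORCE-style) gradient estimators, whose high variance makes optimization much slower and less reliable.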
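The trick itself is one line of arithmetic. This plain-Python sketch shows only that arithmetic; in a real model the same expression would run on autograd tensors (for example in PyTorch or JAX) so that gradients reach μ and σ. Having the encoder predict log-variance rather than σ is a common convention assumed here, and `reparameterize` is a hypothetical helper name.

```python
import math
import random
from typing import List, Optional, Sequence


def reparameterize(
    mu: Sequence[float],
    log_var: Sequence[float],
    eps: Optional[Sequence[float]] = None,
    rng: Optional[random.Random] = None,
) -> List[float]:
    """Draw z ~ N(mu, diag(exp(log_var))) as z = mu + sigma * eps, with eps ~ N(0, I).

    All randomness lives in `eps`, so z is a deterministic, differentiable
    function of mu and log_var. Pass `eps` explicitly for reproducible results.
    """
    if eps is None:
        rng = rng or random.Random()
        eps = [rng.gauss(0.0, 1.0) for _ in mu]
    # sigma = exp(0.5 * log_var); predicting log-variance keeps sigma positive.
    return [m + math.exp(0.5 * lv) * e for m, lv, e in zip(mu, log_var, eps)]
```

Because ε is an input rather than something drawn inside the network, the sampling step no longer blocks backpropagation.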

The ELBO: Balancing Reconstruction and Regularization

VAE training optimizes the Evidence Lower Bound (ELBO), which elegantly balances two competing objectives:

Reconstruction Loss: The decoder should faithfully reconstruct inputs from their latent representations. For images, this typically means a pixel-wise measure such as mean squared error; more sophisticated systems add perceptual losses that compare high-level features extracted by a pretrained network.

KL Divergence: The encoder's latent distributions should stay close to a prior distribution (usually a standard Gaussian). This regularization stops the encoder from scattering training examples into isolated pockets of latent space and keeps the space continuous, so points sampled from the prior decode to plausible outputs.
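Written out, the two terms form a lower bound on the log-likelihood of the data. In the notation below, θ denotes the decoder's weights and φ the encoder's; these symbols, and the diagonal Gaussian encoder that yields the closed-form KL term, are standard conventions assumed for illustration.

```latex
\log p_\theta(x) \;\ge\; \mathcal{L}(\theta, \phi; x)
  = \underbrace{\mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big]}_{\text{reconstruction}}
  - \underbrace{D_{\mathrm{KL}}\big(q_\phi(z \mid x) \,\|\, p(z)\big)}_{\text{regularization}}

% With p(z) = N(0, I) and q_phi(z | x) = N(mu, diag(sigma^2)) over J latent dimensions:
D_{\mathrm{KL}} = \frac{1}{2} \sum_{j=1}^{J} \left( \mu_j^2 + \sigma_j^2 - \log \sigma_j^2 - 1 \right)
```

Training maximizes this bound, or equivalently minimizes its negative.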

This balance is crucial for generative applications. Weight reconstruction too heavily and the model behaves like a plain autoencoder, leaving gaps between encoded examples that decode to garbage; weight regularization too heavily and the encodings all collapse toward the prior, so outputs become blurry and generic. The sweet spot produces latent spaces where nearby points decode to semantically similar outputs, which is essential for smooth video generation and realistic face manipulation.
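One common way to make this trade-off explicit is a weight β on the KL term, the idea popularized by β-VAE. The sketch below assumes a Gaussian decoder with fixed variance, so the reconstruction term becomes a sum of squared errors; under that assumption the loss equals the negative ELBO up to additive constants and an overall scale that β effectively absorbs. Function names are hypothetical.

```python
import math
from typing import Sequence


def gaussian_kl(mu: Sequence[float], log_var: Sequence[float]) -> float:
    """Closed-form KL(N(mu, diag(exp(log_var))) || N(0, I))."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, log_var))


def squared_error(x: Sequence[float], x_hat: Sequence[float]) -> float:
    """Pixel-wise reconstruction term: sum of squared differences."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))


def vae_loss(
    x: Sequence[float],
    x_hat: Sequence[float],
    mu: Sequence[float],
    log_var: Sequence[float],
    beta: float = 1.0,
) -> float:
    """Reconstruction error plus beta-weighted KL divergence.

    beta > 1 favors a smoother latent space (and blurrier outputs);
    beta < 1 favors sharper reconstructions at the cost of a gappier latent space.
    """
    return squared_error(x, x_hat) + beta * gaussian_kl(mu, log_var)
```

When the encoder outputs exactly the prior (μ = 0, log σ² = 0), the KL term is zero; any departure from the prior is charged in proportion to β.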

VAEs in the Synthetic Media Pipeline

Understanding VAEs illuminates how modern AI video systems actually work. Latent Diffusion Models (LDMs), which power Stable Diffusion and its video variants, use VAEs as their compression backbone:

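1. Encoding: Input images or video frames are compressed by a VAE encoder into latent representations. In Stable Diffusion, for example, a 512×512 RGB image becomes a 64×64×4 latent, about 48 times fewer values, which sharply reduces the cost of every diffusion step.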

2. Diffusion: The diffusion model operates entirely in this compressed latent space. It is trained to remove noise added to encoded latents; at generation time it starts from random noise and denoises step by step into a new latent.

3. Decoding: The VAE decoder transforms latent representations back into pixel space, producing the final output.
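The size of the saving in the encoding step is simple arithmetic. This sketch assumes an autoencoder that downsamples each spatial side by a factor of 8 into 4 latent channels, the configuration of the Stable Diffusion v1 autoencoder; other models use different factors and channel counts, so both are parameters. The function names are hypothetical.

```python
from typing import Tuple


def latent_shape(
    height: int, width: int, factor: int = 8, latent_channels: int = 4
) -> Tuple[int, int, int]:
    """Latent (h, w, c) for an autoencoder that downsamples each side by `factor`."""
    if height % factor or width % factor:
        raise ValueError("image sides must be divisible by the downsampling factor")
    return height // factor, width // factor, latent_channels


def compression_ratio(height: int, width: int, channels: int = 3, **kwargs) -> float:
    """How many times fewer values the diffusion model handles in latent space."""
    h, w, c = latent_shape(height, width, **kwargs)
    return (height * width * channels) / (h * w * c)
```

For a 512×512 RGB image this gives a 64×64×4 latent and a ratio of 48. The ratio stays the same at any resolution because both sides scale together, but the absolute savings grow with image size.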

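This architecture explains why VAE quality directly affects final output quality. The diffusion model only ever produces latents; the decoder determines how faithfully they become pixels, so fine details such as small text and facial features are limited by what the autoencoder can reconstruct. A better encoder preserves more detail in the latent; a better decoder produces sharper, more detailed reconstructions.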

Implications for Deepfakes and Detection

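VAE latent spaces have profound implications for both creating and detecting synthetic media. Face-swapping systems often encode faces into a shared latent space, change the identity while preserving expression and pose, and decode the result. In the classic design, one shared encoder is trained alongside a separate decoder for each identity: because the shared latent captures pose, expression, and lighting, encoding person A's face and decoding it with person B's decoder renders B's identity with A's expression. Many of these tools use plain rather than variational autoencoders, but the latent-space principle is the same.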
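The shared-encoder layout is easier to see as routing than as prose. In this structural sketch, `SharedEncoderSwapper`, `render`, and the identity labels are hypothetical names, and the encoder and decoders are plain callables standing in for trained networks; training itself is not shown.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict

Encoder = Callable[[Any], Any]  # face image -> latent (pose, expression, lighting)
Decoder = Callable[[Any], Any]  # latent -> face image of one specific identity


@dataclass
class SharedEncoderSwapper:
    """Classic face-swap layout: one shared encoder, one decoder per identity."""

    encoder: Encoder
    decoders: Dict[str, Decoder] = field(default_factory=dict)

    def render(self, face: Any, identity: str) -> Any:
        """Encode `face`, then decode it with `identity`'s decoder.

        With the face's own identity this is a reconstruction (the training
        objective); with a different identity it is a swap.
        """
        return self.decoders[identity](self.encoder(face))
```

The swap needs no special machinery: it is the reconstruction path with a different decoder plugged in, which is why the shared latent must carry everything except identity.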

For detection, reconstruction behavior can reveal synthetic origins. An image produced by a latent diffusion model was, by construction, output by that model's VAE decoder, so passing it back through the same autoencoder tends to reconstruct it with lower error than a real photograph. Researchers are actively exploring reconstruction-based anomaly detection as a deepfake identification strategy.
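A reconstruction-based detector can be sketched in a few lines. This is a minimal illustration under loud assumptions: images are flattened lists of floats, `reconstruct` is a hypothetical stand-in for an encode-then-decode round trip through a trained autoencoder, generated images are assumed to score lower error than real ones, and the midpoint-of-means threshold is a deliberately naive heuristic rather than any published method.

```python
from typing import Callable, Sequence

Image = Sequence[float]  # flattened pixel values (simplifying assumption)


def reconstruction_error(image: Image, reconstruct: Callable[[Image], Image]) -> float:
    """Mean squared error between an image and its autoencoder round trip."""
    recon = reconstruct(image)
    return sum((a - b) ** 2 for a, b in zip(image, recon)) / len(image)


def calibrate_threshold(real_errors: Sequence[float], fake_errors: Sequence[float]) -> float:
    """Midpoint between the mean errors of labeled real and generated images."""
    real_mean = sum(real_errors) / len(real_errors)
    fake_mean = sum(fake_errors) / len(fake_errors)
    return (real_mean + fake_mean) / 2.0


def looks_generated(
    image: Image, reconstruct: Callable[[Image], Image], threshold: float
) -> bool:
    """Flag images whose round-trip error falls below the calibrated threshold."""
    return reconstruction_error(image, reconstruct) < threshold
```

A practical detector would likely compare errors with a perceptual distance rather than raw MSE, and the threshold only transfers to images from the same autoencoder it was calibrated on.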

Beyond Images: Video and Audio Applications

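Video generation extends VAE principles into the time dimension. VideoGPT, for example, uses a VQ-VAE with 3D convolutions to compress clips into a grid of discrete latent codes spanning both space and time, which a transformer then learns to generate. More recent commercial systems likewise encode video into spatiotemporal latent representations, enabling coherent motion across frames.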

Some voice-cloning systems similarly use VAE-style architectures to separate speaker identity from linguistic content, enabling the voice-swapping capabilities that make audio deepfakes increasingly convincing.

The Road Ahead

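Recent research pushes VAE capabilities further. Hierarchical VAEs stack multiple levels of latent variables, from coarse structure to fine detail. VQ-VAEs (Vector Quantized VAEs) replace each continuous latent vector with its nearest entry in a learned codebook, producing discrete codes that autoregressive models such as transformers can then learn to generate. Diffusion-augmented VAEs combine the strengths of both generative paradigms.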
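The quantization step at the heart of a VQ-VAE is a nearest-neighbor lookup. This sketch shows only the forward lookup on plain lists; the training pieces of a real VQ-VAE (a learned codebook, the straight-through gradient estimator, and the codebook and commitment losses) are omitted. `quantize` is a hypothetical helper name.

```python
from typing import List, Sequence, Tuple


def quantize(
    z: Sequence[float], codebook: Sequence[Sequence[float]]
) -> Tuple[int, List[float]]:
    """Replace latent vector z with its nearest codebook entry (squared L2 distance).

    Returns the code index (the discrete token a transformer would model)
    and the codebook vector that the decoder actually receives.
    """
    if not codebook:
        raise ValueError("codebook must not be empty")

    def sq_dist(entry: Sequence[float]) -> float:
        return sum((a - b) ** 2 for a, b in zip(z, entry))

    index = min(range(len(codebook)), key=lambda i: sq_dist(codebook[i]))
    return index, list(codebook[index])
```

The index is what makes the latent "discrete": a whole image or clip becomes a grid of integers that a sequence model can predict token by token.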

For anyone working with or analyzing synthetic media, understanding VAEs isn't optional—it's foundational knowledge. These mathematical structures define what's possible in AI video generation, what artifacts synthetic content produces, and ultimately, how we might distinguish real from generated in an increasingly synthetic media landscape.

Stay informed on AI video and digital authenticity. Follow Skrew AI News.