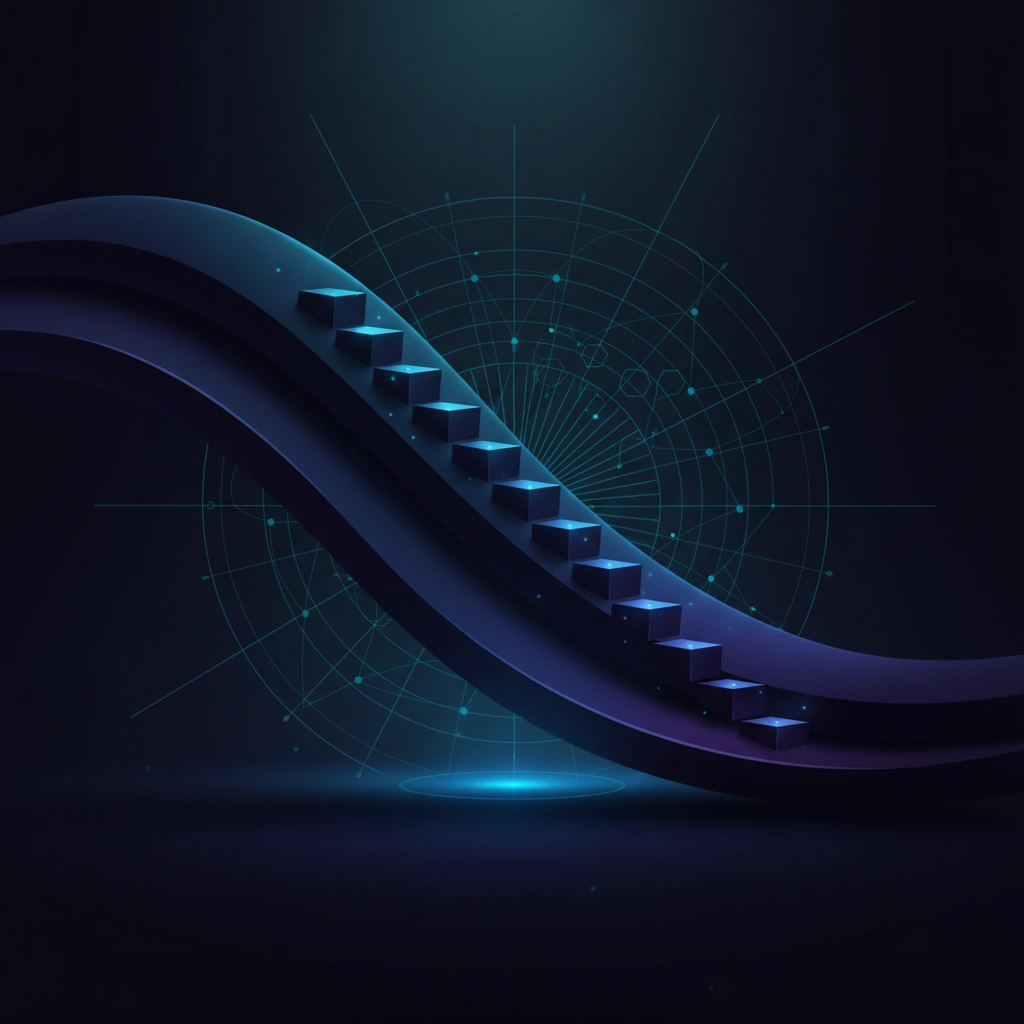

Gradient Descent Explained: The Core Algorithm Behind AI

Understanding gradient descent is essential to understanding how neural networks learn. This foundational optimization algorithm, together with its variants, is used to train models ranging from deepfake generators to the detection systems built to catch them.