Sphere Neural Networks: Guaranteed Decision Regions for AI

New neural architecture creates mathematically guaranteed decision regions using hyperspheres, enabling AI systems to know when they're uncertain rather than making unreliable predictions.

What if a neural network could tell you exactly when it was confident and when it wasn't—with mathematical certainty? A new research paper introduces Sphere Neural Networks (SNNs), an architecture that creates guaranteed decision regions in feature space, fundamentally changing how AI systems handle uncertainty.

The Problem With Traditional Neural Network Decisions

Standard neural networks make decisions by learning hyperplanes that divide feature space into regions. When new data arrives, the network assigns it to whichever region it falls into—but there's a critical problem. Traditional networks will confidently classify any input, even when that input lies far from anything seen during training.

This leads to a well-documented issue: neural networks can be confidently wrong. They assign high probability scores to completely unfamiliar inputs, creating dangerous situations in safety-critical applications like medical diagnosis, autonomous vehicles, and—crucially for our domain—deepfake detection systems where false confidence can be catastrophic.

Enter Sphere Neural Networks

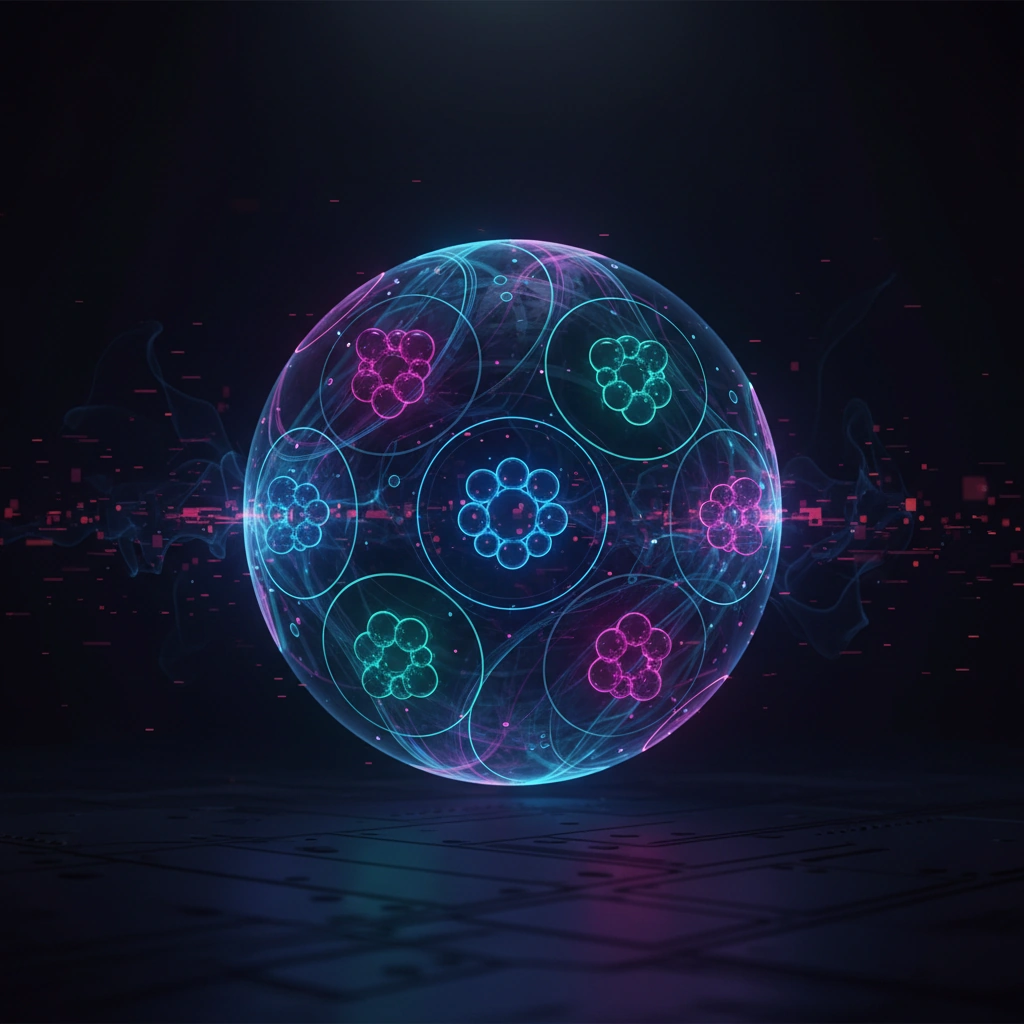

The researchers propose replacing traditional decision boundaries with hyperspheres—high-dimensional spherical regions in the network's learned feature space. Each class gets its own sphere, and classification happens only when an input falls definitively within a sphere's boundary.

The key innovation is the mathematical guarantee: if an input doesn't fall within any defined sphere, the network explicitly refuses to classify it. This isn't a soft "low confidence" score; it's a hard geometric constraint, so by design the network cannot emit a label for an input whose embedding lies outside every sphere.

How the Architecture Works

SNNs operate through a two-stage process. First, a feature extraction backbone (which can be any standard architecture like a CNN or transformer) maps inputs into a learned embedding space. Second, the sphere layer defines class-specific hyperspheres in this embedding space using learned center points and radii.

During training, the network learns to place same-class examples inside their corresponding spheres while keeping different classes in separate spherical regions. The optimization objective balances two goals: maximizing coverage (getting training examples inside their spheres) and maintaining separation (keeping spheres from overlapping).
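To make the two competing goals concrete, here is a minimal sketch of what such an objective could look like. It is not the paper's loss: the function name `sphere_loss`, the hinge-style penalties, the Euclidean distance, and the `margin` and `sep_weight` parameters are all assumptions chosen for illustration.

```python
import numpy as np

def sphere_loss(embeddings, labels, centers, radii, margin=0.0, sep_weight=1.0):
    """Hypothetical coverage + separation objective (illustrative only).

    embeddings: (n, d) feature vectors from the backbone
    labels:     (n,)   integer class ids
    centers:    (k, d) one sphere center per class
    radii:      (k,)   one sphere radius per class
    """
    # Distance from every embedding to every class center: shape (n, k).
    dists = np.linalg.norm(embeddings[:, None, :] - centers[None, :, :], axis=2)

    # Coverage: penalize examples that lie outside their own class sphere.
    own_dist = dists[np.arange(len(labels)), labels]
    coverage = np.maximum(0.0, own_dist - radii[labels] + margin).mean()

    # Separation: two spheres overlap when the distance between their
    # centers is smaller than the sum of their radii.
    center_dists = np.linalg.norm(centers[:, None, :] - centers[None, :, :], axis=2)
    overlap = np.maximum(0.0, radii[:, None] + radii[None, :] - center_dists)
    pairs = np.triu_indices(len(centers), k=1)  # count each pair of spheres once
    separation = overlap[pairs].sum()

    return coverage + sep_weight * separation
```

In this sketch the loss is zero exactly when every training example sits inside its own sphere and no two spheres overlap, which mirrors the balance between coverage and separation described above.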

The mathematical formulation uses distance metrics from each point to sphere centers, comparing these distances against learned radii. Points inside a sphere satisfy a simple inequality: their distance to the center is less than the radius.
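Written out, the inequality looks like this. The symbols are assumptions introduced here for illustration: f is the feature backbone, c_k and r_k are the learned center and radius for class k, and the Euclidean norm stands in for whichever distance metric the paper actually uses.

```latex
% An input x lies inside the sphere for class k when
\[
  \lVert f(x) - c_k \rVert_2 < r_k .
\]
% A decision rule consistent with the description: predict k only when
% exactly one sphere contains the embedding, and abstain otherwise.
\[
  \hat{y}(x) =
  \begin{cases}
    k, & \text{if } \lVert f(x) - c_k \rVert_2 < r_k \text{ for exactly one } k,\\
    \text{abstain}, & \text{otherwise.}
  \end{cases}
\]
```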

Implications for Detection Systems

For deepfake detection and content authenticity verification, this architecture addresses a persistent challenge. Current detection systems often fail on novel manipulation techniques because they were trained on specific known methods. When encountering a new deepfake approach, traditional classifiers confidently label it as "real" or "fake" without acknowledging uncertainty.

Sphere Neural Networks could enable detection systems that explicitly flag unfamiliar content. Rather than forcing a binary real/fake decision, an SNN-based detector could return three outcomes: definitely real, definitely manipulated, or "unknown—this doesn't match patterns I was trained on."
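A minimal sketch of that three-outcome decision might look like the following. The function name `detect`, the label strings, and the choice to treat an embedding that lands in both spheres as "unknown" are assumptions for illustration, not details taken from the paper.

```python
import numpy as np

LABELS = ("real", "manipulated")  # hypothetical class names, one sphere each

def detect(embedding, centers, radii):
    """Return "real", "manipulated", or "unknown" for one embedding.

    centers: (2, d) sphere centers, ordered like LABELS
    radii:   (2,)   sphere radii
    """
    # Euclidean distance from the embedding to each sphere center.
    dists = np.linalg.norm(centers - embedding, axis=1)
    # Indices of the spheres that contain the embedding.
    inside = np.flatnonzero(dists < radii)
    if inside.size == 1:
        return LABELS[inside[0]]
    # Outside every sphere (or inside more than one): abstain.
    return "unknown"
```

A caller could route every "unknown" result to a human review queue instead of acting on it automatically.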

This uncertainty-aware approach aligns with content authentication workflows where flagging uncertain cases for human review is far preferable to confident misclassification.

Technical Advantages and Trade-offs

The sphere-based approach offers several concrete benefits beyond uncertainty handling:

Interpretability: Spherical decision regions are geometrically intuitive. You can visualize where classes exist in feature space and understand why specific inputs receive their classifications or uncertainty flags.

Robustness to adversarial examples: Traditional classifiers can be fooled by small perturbations that push inputs across hyperplane boundaries. Spherical boundaries may prove more resistant since adversarial perturbations would need to move points into sphere interiors rather than just across flat boundaries.

Open-set recognition: SNNs are a natural fit for the open-set problem, where test data includes classes not seen during training. Inputs from an unknown class are rejected whenever their embeddings fall outside all learned spheres.

However, trade-offs exist. The sphere constraint is geometrically restrictive—real class distributions may not be spherical in learned feature space. The researchers address this through multiple spheres per class and careful feature learning, but achieving high coverage while maintaining guarantees requires architectural tuning.
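One way to picture the multiple-spheres idea is to treat each class as a union of spheres. The sketch below assumes that reading; the function name `classify_multi` and the `sphere_class` array mapping each sphere to its owning class are hypothetical, and the paper's actual construction may differ.

```python
import numpy as np

def classify_multi(embedding, centers, radii, sphere_class):
    """Classify against several spheres per class, or abstain with None.

    centers:      (m, d) centers of all spheres across all classes
    radii:        (m,)   radius of each sphere
    sphere_class: (m,)   integer class id that owns each sphere
    """
    dists = np.linalg.norm(centers - embedding, axis=1)
    # Classes that own at least one sphere containing the embedding.
    hit_classes = np.unique(sphere_class[dists < radii])
    if hit_classes.size == 1:
        return int(hit_classes[0])
    # No sphere contains the point, or spheres of different classes do.
    return None
```

Covering a non-spherical class with several small spheres keeps the hard inside/outside test intact, at the cost of more centers and radii to learn and tune.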

Broader Context: Reliable AI Decision-Making

This research connects to a growing movement in machine learning toward selective prediction and reliable uncertainty quantification. As AI systems are deployed in high-stakes domains, the ability to abstain from predictions becomes as important as prediction accuracy.

For synthetic media detection specifically, the adversarial nature of the problem—where creators actively try to evade detection—makes reliability guarantees particularly valuable. A system that can definitively say "I don't know" when facing novel manipulation techniques provides operational value that confident-but-wrong systems cannot.

The sphere neural network approach represents one architectural solution to this challenge, using geometric constraints to enforce the reliability properties that traditional soft confidence scores cannot guarantee.
