MindCraft: Mapping How AI Models Build Visual Concepts

A new framework maps how AI models internally structure concepts as hierarchical trees, which could offer insight into how deepfakes and other synthetic media are generated inside the network.

Researchers have introduced MindCraft, a framework that reveals how large AI models internally organize and stabilize concepts. The work could help explain how synthetic media generators, such as deepfake models, operate under the hood.

The research addresses a fundamental mystery in AI: while foundation models demonstrate remarkable capabilities across language, vision, and reasoning tasks, the mechanisms by which they structure knowledge internally have remained opaque. This opacity is particularly problematic for synthetic media generation, where understanding concept formation could lead to better detection methods and more controllable generation.

Concept Trees: The Hidden Architecture

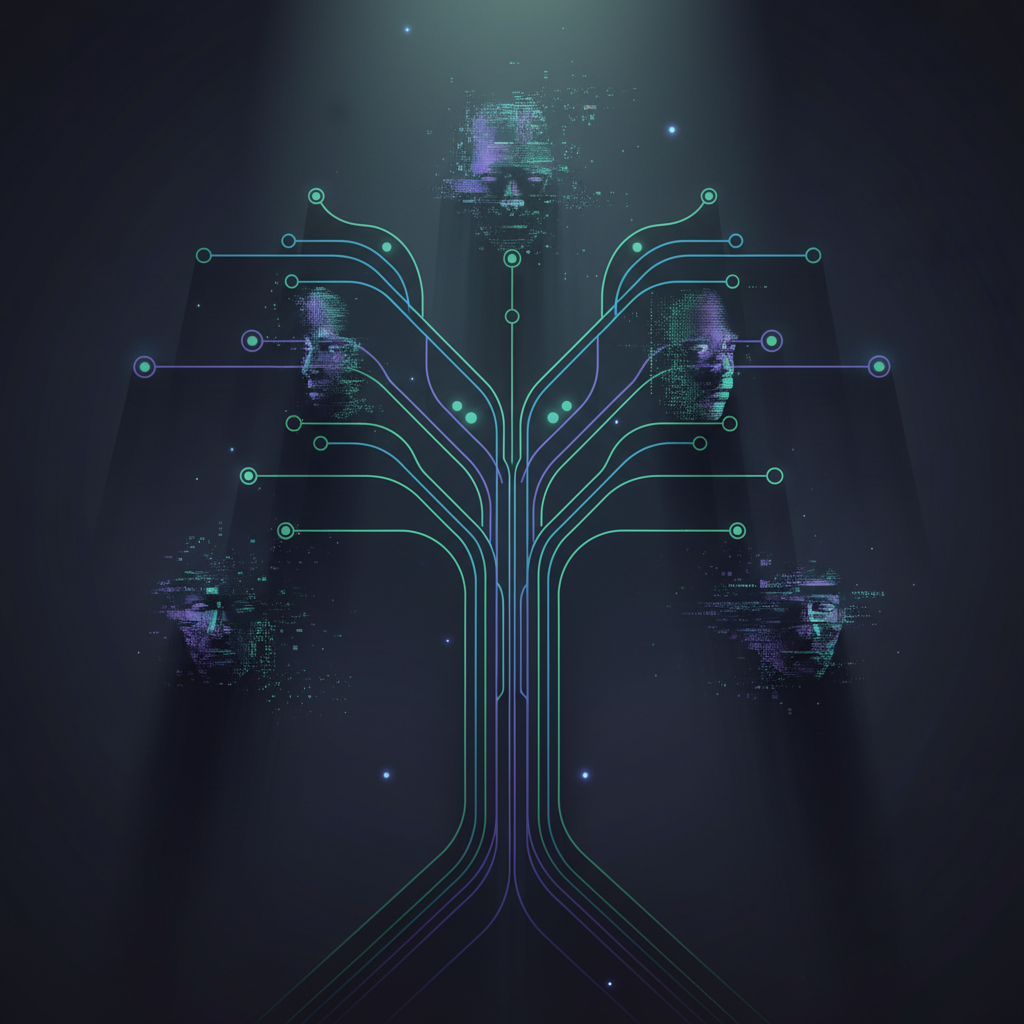

MindCraft introduces "Concept Trees," a novel approach inspired by causal inference that maps how concepts emerge and diverge within neural networks. By applying spectral decomposition at each layer and linking principal directions into branching paths, the framework reconstructs the hierarchical emergence of concepts.
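To make the idea concrete, here is a minimal sketch of the two steps described above: decompose each layer's activations, then link principal directions across adjacent layers. It is an illustration under stated assumptions, not MindCraft's actual implementation. The function names (`top_directions`, `build_concept_tree`), the use of a plain SVD as the spectral decomposition, and the linking rule (matching directions by the correlation of their per-sample scores) are all hypothetical choices made for this example.

```python
import numpy as np

def top_directions(acts, k):
    """Return unit-norm per-sample scores for the top-k principal directions.

    acts has shape (n_samples, n_features). Working with scores in sample
    space lets us compare layers whose feature dimensions differ.
    """
    centered = acts - acts.mean(axis=0, keepdims=True)
    u, _, _ = np.linalg.svd(centered, full_matrices=False)
    return u[:, :k]  # shape (n_samples, k), orthonormal columns

def build_concept_tree(layer_acts, k=3):
    """Link each direction to its most similar direction in the previous layer.

    Assumption: similarity is the absolute cosine between score vectors.
    Returns a list of (parent, child, similarity) edges, where parent and
    child are (layer_index, direction_index) tuples.
    """
    scores = [top_directions(a, k) for a in layer_acts]
    edges = []
    for layer in range(1, len(scores)):
        sim = np.abs(scores[layer - 1].T @ scores[layer])  # shape (k, k)
        parents = sim.argmax(axis=0)  # best parent for each child direction
        for child, parent in enumerate(parents):
            edges.append(((layer - 1, int(parent)),
                          (layer, child),
                          float(sim[parent, child])))
    return edges

if __name__ == "__main__":
    # Random stand-in activations for three layers of different widths.
    rng = np.random.default_rng(0)
    layers = [rng.normal(size=(50, d)) for d in (8, 16, 4)]
    for parent, child, weight in build_concept_tree(layers):
        print(parent, "->", child, round(weight, 3))
```

In this sketch, a parent direction that attracts more than one child marks a branch point in the tree. Real activations would come from hooks on a trained model rather than random data.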

The key insight is that concepts don't exist as monolithic entities within models. Instead, they emerge progressively through the layers, branching from shared representations into distinct, linearly separable subspaces. This branching structure shows at which layer a model begins to differentiate between, for example, a real face and a synthetic one, or how it builds up complex visual scenes from simpler components.
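One simple way to look for the layer at which two concepts become linearly separable is a per-layer linear probe. The sketch below is not taken from the MindCraft paper. It assumes you already have per-layer activations and binary labels, and the names `probe_accuracy` and `first_separable_layer`, the least-squares probe, and the 0.95 threshold are hypothetical choices for illustration.

```python
import numpy as np

def probe_accuracy(acts, labels):
    """Fit a least-squares linear probe on even rows, score it on odd rows."""
    x = np.hstack([acts, np.ones((acts.shape[0], 1))])  # append a bias column
    y = np.where(labels == 1, 1.0, -1.0)
    train, test = slice(0, None, 2), slice(1, None, 2)
    w, *_ = np.linalg.lstsq(x[train], y[train], rcond=None)
    preds = (x[test] @ w) > 0
    return float(np.mean(preds == (labels[test] == 1)))

def first_separable_layer(layer_acts, labels, threshold=0.95):
    """Return the first layer index whose held-out probe accuracy reaches
    the threshold, or None if no layer does."""
    for idx, acts in enumerate(layer_acts):
        if probe_accuracy(acts, labels) >= threshold:
            return idx
    return None

if __name__ == "__main__":
    # Synthetic stand-in: layer 0 is pure noise, layer 1 encodes the label.
    rng = np.random.default_rng(0)
    labels = rng.integers(0, 2, size=200)
    noise_layer = rng.normal(size=(200, 5))
    signal_layer = rng.normal(size=(200, 5))
    signal_layer[:, 0] += 10.0 * labels
    print(first_separable_layer([noise_layer, signal_layer], labels))
```

A probe like this only indicates where the separation becomes detectable. It says nothing on its own about the tree structure that leads to it.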

Implications for Synthetic Media

For the deepfake and AI video community, MindCraft's findings could have significant implications. Understanding concept hierarchies could enable:

Better Detection Systems: By mapping how authentic versus synthetic concepts diverge in model representations, researchers could develop more robust deepfake detectors that target these specific divergence points rather than surface-level artifacts.

Controlled Generation: Video synthesis models could leverage concept trees to offer more precise control over generated content. Instead of treating generation as a black box, creators could potentially manipulate specific branches of the concept tree to achieve desired outcomes while maintaining realism.

Quality Assessment: The framework could provide new metrics for evaluating synthetic media quality by examining how well generated content aligns with the concept hierarchies learned from real data.
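As a rough illustration of the quality-assessment idea, the sketch below compares the dominant subspace of activations from real data with that of generated data at a single layer, using principal angles. This is a hypothetical metric, not one defined by MindCraft. The names `top_subspace` and `subspace_alignment`, the choice of `k`, and the mean squared cosine score are assumptions made for this example.

```python
import numpy as np

def top_subspace(acts, k):
    """Orthonormal basis for the top-k principal directions of one layer."""
    centered = acts - acts.mean(axis=0, keepdims=True)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return vt[:k].T  # shape (n_features, k)

def subspace_alignment(real_acts, gen_acts, k=3):
    """Mean squared cosine of the principal angles between two subspaces.

    Returns a value between 0 and 1: 1 means the top-k subspaces coincide,
    0 means they are orthogonal.
    """
    q_real = top_subspace(real_acts, k)
    q_gen = top_subspace(gen_acts, k)
    # Singular values of Q_real^T Q_gen are the cosines of the principal angles.
    cosines = np.linalg.svd(q_real.T @ q_gen, compute_uv=False)
    return float(np.mean(np.clip(cosines, 0.0, 1.0) ** 2))

if __name__ == "__main__":
    # Synthetic stand-in: variance concentrated in different feature groups.
    rng = np.random.default_rng(0)
    scales_a = np.array([5.0, 4.0, 3.0, 0.1, 0.1, 0.1])
    scales_b = scales_a[::-1]
    real = rng.normal(size=(100, 6)) * scales_a
    similar = rng.normal(size=(100, 6)) * scales_a
    different = rng.normal(size=(100, 6)) * scales_b
    print(subspace_alignment(real, similar))
    print(subspace_alignment(real, different))
```

Both inputs must come from the same layer of the same model so that their feature dimensions match.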

Cross-Domain Validation

The researchers validated MindCraft across diverse scenarios including medical diagnosis, physics reasoning, and political decision-making. This cross-domain success suggests the framework captures general principles of how neural networks organize information, and those principles may also govern how diffusion models, GANs, and other generative architectures create synthetic media.

The ability to recover semantic hierarchies and disentangle latent concepts means researchers can now peer into the "thought process" of models as they generate images or videos. For instance, when a model creates a deepfake video, MindCraft could potentially reveal how it constructs facial features, expressions, and movements from its learned concept hierarchy.

Foundation for Interpretable AI

Perhaps most significantly, MindCraft establishes a foundation for interpretable AI in the context of media generation. As synthetic media becomes increasingly sophisticated and harder to detect with traditional methods, understanding the conceptual machinery behind generation becomes critical.

The framework's ability to reveal when concepts diverge from shared representations could be particularly valuable for understanding adversarial examples and manipulation techniques. If we can see how a model's concept of "authenticity" forms and branches, we might better understand how to preserve digital trust in an era of synthetic media.

This research marks a significant step toward demystifying the black box of neural networks, offering a window into the conceptual structures that underlie both the creation and detection of synthetic media. As the field advances toward more powerful and convincing AI-generated content, tools like MindCraft become essential for maintaining the balance between creative capability and digital authenticity.
