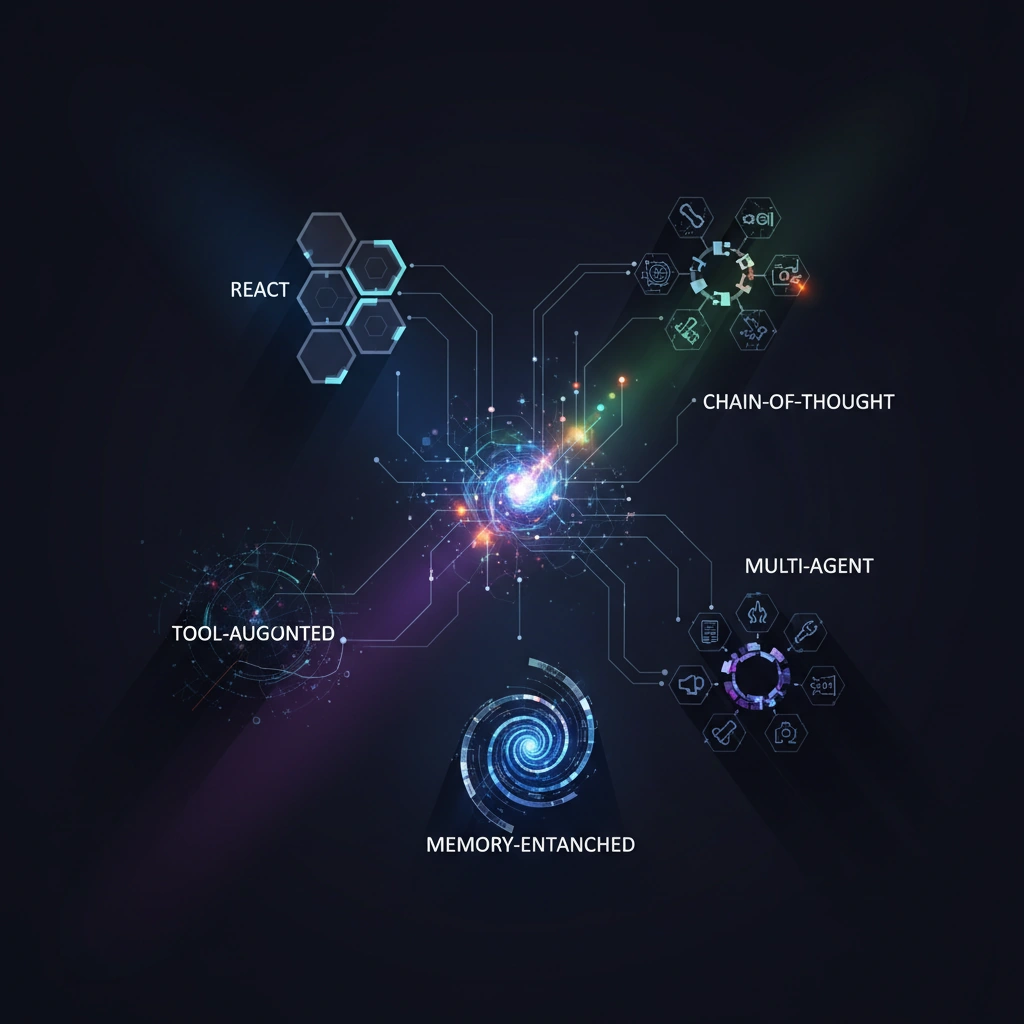

Essential AI Agent Design Patterns for Developers in 2025

Technical deep dive into AI agent architectures: ReAct, Chain-of-Thought, Tool-Augmented, Multi-Agent, and Memory-Enhanced patterns. Includes implementation details and real-world examples for building autonomous AI systems.

As AI agents evolve from simple chatbots to autonomous systems capable of complex reasoning and multi-step tasks, understanding core design patterns has become essential for developers. These architectural patterns define how agents perceive, reason, and act—forming the foundation of modern agentic AI systems.

The ReAct Pattern: Reasoning and Acting in Synergy

The ReAct (Reasoning and Acting) pattern represents a fundamental shift in how AI agents operate. Rather than generating responses in a single pass, ReAct agents alternate between reasoning about a problem and taking actions to gather information or accomplish tasks.

This pattern works through a cycle: the agent observes its environment, reasons about what action to take next, executes that action, observes the result, and continues this loop until reaching a goal. For example, when asked to find the current weather in a specific city, a ReAct agent first reasons that it needs weather data, then decides to use a weather API tool, executes the API call, observes the returned data, and finally formulates a response.

The technical implementation typically involves prompt engineering that explicitly separates "Thought," "Action," and "Observation" phases. This structured approach significantly improves reliability compared to single-step generation, particularly for tasks requiring multiple information-gathering steps or external tool usage.
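The Thought, Action, Observation cycle described above can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: `scripted_model` is a hypothetical stand-in for a real LLM call, `get_weather` is a stub instead of a real weather API, and the `Action: tool[argument]` text format is an assumed convention.

```python
import re
from typing import Callable, Dict

def get_weather(city: str) -> str:
    """Hypothetical tool: a stub standing in for a real weather API."""
    return f"18 C and cloudy in {city}"

TOOLS: Dict[str, Callable[[str], str]] = {"get_weather": get_weather}

def scripted_model(transcript: str) -> str:
    """Stand-in for an LLM call: returns the next step given the transcript so far."""
    if "Observation:" not in transcript:
        return "Thought: I need weather data.\nAction: get_weather[Paris]"
    observation = transcript.rsplit("Observation: ", 1)[1].strip()
    return f"Thought: I have the data.\nFinal Answer: {observation}"

# Assumed action format: "Action: tool_name[argument]"
ACTION_RE = re.compile(r"Action: (\w+)\[(.*?)\]")

def react(question: str,
          model: Callable[[str], str] = scripted_model,
          max_steps: int = 5) -> str:
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = model(transcript)               # reason about what to do next
        transcript += step + "\n"
        if "Final Answer:" in step:            # the model decided it is done
            return step.split("Final Answer:", 1)[1].strip()
        match = ACTION_RE.search(step)
        if match is None:
            transcript += "Observation: no valid action found\n"
            continue
        name, arg = match.groups()
        tool = TOOLS.get(name)
        result = tool(arg) if tool else f"unknown tool {name}"
        transcript += f"Observation: {result}\n"   # feed the result back in
    return "Stopped: step limit reached"
```

Calling `react("What is the weather in Paris?")` walks through one tool call and then returns the final answer. The `max_steps` limit keeps a confused model from looping indefinitely.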

Chain-of-Thought: Breaking Down Complex Reasoning

The Chain-of-Thought (CoT) pattern addresses the challenge of complex multi-step reasoning. By prompting language models to show their work, that is, to generate intermediate reasoning steps before arriving at a final answer, CoT can substantially improve performance on mathematical, logical, and analytical tasks.

Well-known variants include self-consistency CoT, where the model samples multiple reasoning paths and the most common final answer is selected, and zero-shot CoT, which elicits step-by-step reasoning simply by appending "Let's think step by step" to the prompt. These techniques have proven particularly effective in domains like code generation, mathematical problem-solving, and strategic planning.
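Self-consistency amounts to a majority vote over sampled answers, which can be sketched as follows. This sketch makes several assumptions: `make_fake_sampler` is a hypothetical stand-in for sampling a model at non-zero temperature, and the `Answer:` marker is an assumed output format rather than anything a particular model guarantees.

```python
from collections import Counter
from itertools import cycle
from typing import Callable

ZERO_SHOT_SUFFIX = "Let's think step by step."

def extract_answer(completion: str) -> str:
    """Take the text after the last 'Answer:' marker (an assumed output format)."""
    return completion.rsplit("Answer:", 1)[-1].strip()

def self_consistent_answer(sample: Callable[[str], str], question: str, n: int = 5) -> str:
    """Sample n reasoning paths and return the most common final answer."""
    prompt = f"{question}\n{ZERO_SHOT_SUFFIX}"
    answers = [extract_answer(sample(prompt)) for _ in range(n)]
    return Counter(answers).most_common(1)[0][0]

def make_fake_sampler() -> Callable[[str], str]:
    """Hypothetical sampler that cycles through canned completions, one of them wrong."""
    canned = cycle([
        "17 + 20 = 37, then 37 + 5 = 42. Answer: 42",
        "10 + 20 = 30 and 7 + 5 = 12, so the total is 42. Answer: 42",
        "17 + 25 is about 41. Answer: 41",
    ])
    return lambda prompt: next(canned)
```

With five samples from the fake sampler, four paths end in 42 and one in 41, so the vote returns 42. The trade-off is cost: each extra sample is another model call.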

The pattern's strength lies in its transparency: developers can inspect the reasoning process, identify where agents go wrong, and refine prompts accordingly. This interpretability is crucial for deploying agents in production environments where understanding decision-making processes matters.

Tool-Augmented Agents: Extending Capabilities Beyond Language

Tool-augmented agents break free from the limitations of pure language models by integrating external tools and APIs. This pattern transforms agents from text generators into systems that can execute code, query databases, call APIs, and interact with real-world services.

The architecture typically involves three components: a tool registry that describes available tools and their parameters, a planning module that decides which tools to use, and an execution engine that safely runs tool calls and handles results. Frameworks such as LangChain, and agent projects such as AutoGPT, provide reusable tool integration patterns, making it easier to build agents that can browse the web, manipulate files, or control software applications.
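The three components can be sketched in plain Python without any framework. All names here (`Tool`, `ToolRegistry`, `plan`, `execute`) are hypothetical and do not correspond to the API of LangChain or any other library, and the rule-based `plan` function is only a placeholder for the model-driven planning a real agent would use.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Tuple

@dataclass
class Tool:
    name: str
    description: str
    parameters: Dict[str, type]   # parameter name -> expected Python type
    func: Callable[..., Any]

class ToolRegistry:
    """Tool registry: stores tools and renders their descriptions for a prompt."""
    def __init__(self) -> None:
        self._tools: Dict[str, Tool] = {}

    def register(self, tool: Tool) -> None:
        self._tools[tool.name] = tool

    def get(self, name: str) -> Tool:
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        return self._tools[name]

    def describe(self) -> str:
        lines = []
        for tool in self._tools.values():
            params = ", ".join(f"{n}: {t.__name__}" for n, t in tool.parameters.items())
            lines.append(f"{tool.name}({params}): {tool.description}")
        return "\n".join(lines)

def plan(request: str) -> Tuple[str, Dict[str, Any]]:
    """Planning stand-in: a real agent would ask the model to pick a tool.
    This stub expects exactly two integers in the request."""
    a, b = (int(token) for token in request.split() if token.isdigit())
    return "add", {"a": a, "b": b}

def execute(registry: ToolRegistry, name: str, args: Dict[str, Any]) -> Any:
    """Execution engine: validates argument names and types before running the tool."""
    tool = registry.get(name)
    if set(args) != set(tool.parameters):
        raise TypeError(f"{name} expects parameters {sorted(tool.parameters)}")
    for key, expected in tool.parameters.items():
        if not isinstance(args[key], expected):
            raise TypeError(f"{key} must be {expected.__name__}")
    return tool.func(**args)

registry = ToolRegistry()
registry.register(Tool("add", "Add two integers.", {"a": int, "b": int},
                       lambda a, b: a + b))
```

The output of `registry.describe()` is the kind of text that would be placed in the model's prompt so it knows which tools exist. Validating arguments in `execute` matters because model-produced arguments are untrusted input.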

Security becomes paramount in tool-augmented systems. Developers must implement sandboxing, input validation, and permission systems to prevent agents from executing harmful operations. The pattern often includes confirmation mechanisms for high-risk actions, balancing autonomy with safety.
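A permission check, a path validation step, and a confirmation hook might look like the sketch below. The tool names, the allowlist, and the `confirm` callback are all hypothetical. The path check only keeps file arguments inside one directory, so it is not a substitute for real process-level sandboxing.

```python
from pathlib import Path
from typing import Callable

ALLOWED_TOOLS = {"read_file", "delete_file"}   # tools this agent may call at all
HIGH_RISK_TOOLS = {"delete_file"}              # tools that need explicit confirmation

def resolve_in_sandbox(root: Path, user_path: str) -> Path:
    """Input validation: reject paths that resolve outside the sandbox root."""
    root = root.resolve()
    candidate = (root / user_path).resolve()
    if candidate != root and root not in candidate.parents:
        raise ValueError(f"path escapes sandbox: {user_path}")
    return candidate

def guarded_call(name: str,
                 tool: Callable[[Path], str],
                 user_path: str,
                 root: Path,
                 confirm: Callable[[str, Path], bool]) -> str:
    """Run a tool only if it is permitted, its path is valid, and any
    required confirmation has been given."""
    if name not in ALLOWED_TOOLS:
        raise PermissionError(f"tool not permitted: {name}")
    target = resolve_in_sandbox(root, user_path)
    if name in HIGH_RISK_TOOLS and not confirm(name, target):
        return "cancelled by user"
    return tool(target)
```

In an interactive application, `confirm` could prompt the user. In an automated pipeline it might always return `False` so that high-risk actions are queued for review instead of executed.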

Multi-Agent Systems: Collaborative Intelligence

The multi-agent pattern distributes complex tasks across specialized agents that collaborate toward shared goals. This mirrors human team structures: different agents have different expertise, communicate through structured protocols, and coordinate their actions.

Implementation strategies vary from simple sequential pipelines—where one agent's output becomes another's input—to sophisticated systems with dynamic task allocation and inter-agent negotiation. For instance, a content creation system might employ separate agents for research, writing, fact-checking, and editing, each optimized for its specific role.
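The simplest of these strategies, the sequential pipeline, can be sketched as a list of functions applied in order. Each "agent" below is a hypothetical stub that transforms a string; in practice each would wrap a model call with its own role-specific prompt.

```python
from typing import Callable, List

Agent = Callable[[str], str]

def research(topic: str) -> str:
    return f"notes on {topic}"

def write(notes: str) -> str:
    return f"draft based on {notes}"

def fact_check(draft: str) -> str:
    return f"{draft} [facts checked]"

def edit(draft: str) -> str:
    # Uppercase only the first character, leaving the rest untouched.
    return draft[:1].upper() + draft[1:]

def run_pipeline(agents: List[Agent], task: str) -> str:
    """Feed each agent's output to the next agent in the list."""
    for agent in agents:
        task = agent(task)
    return task

PIPELINE: List[Agent] = [research, write, fact_check, edit]
```

Running `run_pipeline(PIPELINE, "solar panels")` passes the topic through all four stages. A pipeline like this is easy to debug because every intermediate result can be logged, but it cannot route work dynamically.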

The technical challenge lies in coordination: agents need shared context, consistent communication protocols, and conflict resolution mechanisms. Recent frameworks implement message-passing architectures, shared memory systems, and coordination agents that orchestrate task distribution and synchronization.
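A message-passing design with a coordinating agent can be sketched with in-memory queues. The `Message`, `MessageBus`, and `coordinate` names are hypothetical, the workers are plain functions rather than model-backed agents, and everything runs synchronously in one process, which a real deployment likely would not.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Callable, Deque, Dict, Optional

@dataclass
class Message:
    sender: str
    recipient: str
    content: str

class MessageBus:
    """One inbox (FIFO queue) per agent name."""
    def __init__(self) -> None:
        self.inboxes: Dict[str, Deque[Message]] = defaultdict(deque)

    def send(self, message: Message) -> None:
        self.inboxes[message.recipient].append(message)

    def receive(self, name: str) -> Optional[Message]:
        inbox = self.inboxes[name]
        return inbox.popleft() if inbox else None

def coordinate(bus: MessageBus,
               workers: Dict[str, Callable[[str], str]],
               tasks: Dict[str, str]) -> Dict[str, str]:
    """Coordinator: dispatch one task per role, let workers reply, collect results."""
    for role, task in tasks.items():
        bus.send(Message("coordinator", role, task))
    for role, handler in workers.items():
        # Each worker drains its inbox and replies to the coordinator.
        while (msg := bus.receive(role)) is not None:
            bus.send(Message(role, "coordinator", handler(msg.content)))
    results: Dict[str, str] = {}
    while (reply := bus.receive("coordinator")) is not None:
        results[reply.sender] = reply.content
    return results
```

Tasks addressed to a role with no registered worker simply stay in that inbox, which is one place where a real system would need a timeout or a fallback.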

Memory-Enhanced Agents: Learning from Experience

Memory-enhanced agents maintain persistent state across interactions, enabling them to learn from experience and maintain context over extended periods. This pattern addresses the stateless nature of language models, which otherwise treat each interaction independently.

Memory systems typically operate at multiple timescales: short-term memory for immediate context (conversation history), working memory for active task state (current goals and intermediate results), and long-term memory for accumulated knowledge (past experiences and learned facts). Vector databases have become a common choice for implementing semantic memory, allowing agents to retrieve relevant past experiences based on embedding similarity rather than exact matching.
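The three tiers and similarity-based retrieval can be illustrated with a small in-memory class. This is a toy under stated assumptions: `embed` uses word counts in place of a real embedding model, a Python list stands in for a vector database, and the `AgentMemory` class and its method names are hypothetical.

```python
import math
from collections import Counter, deque
from typing import Deque, Dict, List, Tuple

def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

class AgentMemory:
    def __init__(self, short_term_size: int = 3) -> None:
        # Short-term: a bounded window of recent turns; the oldest is dropped.
        self.short_term: Deque[str] = deque(maxlen=short_term_size)
        # Working: state of the task currently in progress.
        self.working: Dict[str, str] = {}
        # Long-term: stored facts with their vectors (stand-in for a vector database).
        self.long_term: List[Tuple[str, Counter]] = []

    def observe(self, turn: str) -> None:
        self.short_term.append(turn)

    def store(self, fact: str) -> None:
        self.long_term.append((fact, embed(fact)))

    def recall(self, query: str, k: int = 1) -> List[str]:
        """Return the k stored facts most similar to the query."""
        q = embed(query)
        ranked = sorted(self.long_term, key=lambda item: cosine(q, item[1]), reverse=True)
        return [fact for fact, _ in ranked[:k]]
```

Because the toy embedding only matches on shared words, it misses paraphrases that a learned embedding would catch. The retrieval logic, ranking stored items by cosine similarity to the query, is the part that carries over.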

Advanced implementations incorporate memory summarization to prevent context overflow, importance weighting to prioritize critical information, and episodic memory structures that capture not just facts but entire interaction sequences with their outcomes.
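Two of these ideas, summarization and importance weighting, can be sketched as small helper functions. The function names and thresholds are hypothetical, and `summarize` is passed in as a callable because in practice it would be a model call; the importance scores are assumed to be assigned elsewhere.

```python
from typing import Callable, List, Tuple

def compact(history: List[str],
            summarize: Callable[[List[str]], str],
            max_turns: int = 4,
            keep_recent: int = 2) -> List[str]:
    """If the history is too long, replace the older turns with one summary line
    and keep the most recent turns verbatim."""
    if len(history) <= max_turns:
        return list(history)
    older, recent = history[:-keep_recent], history[-keep_recent:]
    return [f"Summary: {summarize(older)}"] + recent

def prune_by_importance(items: List[Tuple[str, float]],
                        capacity: int) -> List[Tuple[str, float]]:
    """Keep the `capacity` highest-scored items, preserving their original order."""
    ranked = sorted(range(len(items)), key=lambda i: items[i][1], reverse=True)
    keep = set(ranked[:capacity])
    return [item for i, item in enumerate(items) if i in keep]
```

Summarization trades detail for space, so a reasonable design keeps the raw turns in long-term storage and uses the compacted form only for the prompt.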

Practical Implementation Considerations

Building production-ready agents requires combining these patterns thoughtfully. A robust agent architecture might integrate ReAct for action planning, CoT for complex reasoning, tool augmentation for capability extension, and memory enhancement for continuity—all while implementing proper error handling, timeout mechanisms, and cost controls.

Performance optimization matters: each reasoning step typically costs at least one model call, which adds both latency and token cost. Developers must balance reasoning depth against response time and cost, often implementing caching strategies and limiting maximum iteration counts.
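Caching and iteration limits can both be expressed with the standard library. In this sketch, `cached_model` is a hypothetical stand-in for an expensive model call, and the `Budget` class is one possible way to enforce step and time limits; the specific limits are illustrative.

```python
import time
from functools import lru_cache

calls = {"n": 0}   # counts how often the "expensive" call actually runs

@lru_cache(maxsize=256)
def cached_model(prompt: str) -> str:
    """Stand-in for an expensive model call; identical prompts hit the cache."""
    calls["n"] += 1
    return f"response to: {prompt}"

class BudgetExceeded(RuntimeError):
    pass

class Budget:
    """Tracks steps and wall-clock time for one agent run."""
    def __init__(self, max_steps: int, max_seconds: float) -> None:
        self.max_steps = max_steps
        self.deadline = time.monotonic() + max_seconds
        self.steps = 0

    def charge(self) -> None:
        """Call once per reasoning step; raises when a limit is exceeded."""
        self.steps += 1
        if self.steps > self.max_steps:
            raise BudgetExceeded("step limit reached")
        if time.monotonic() > self.deadline:
            raise BudgetExceeded("time limit reached")
```

Exact-match caching only helps when prompts repeat verbatim, and it assumes a repeated prompt should yield the same response, which suits deterministic settings better than sampled ones.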

As AI agents become increasingly capable and autonomous, these design patterns provide the architectural foundation for building reliable, maintainable, and effective systems. Understanding and applying them appropriately separates functional prototypes from production-ready agent applications.
