Benchmarking Uncertainty Metrics in LLM-Based Assessment Systems

New research introduces a comprehensive benchmark for evaluating how well LLMs can quantify their own uncertainty when grading, with implications for AI reliability and trustworthy automated systems.

A new research paper tackles one of the most pressing questions in AI deployment: how confident should we be in the outputs of large language models? The study, titled "How Uncertain Is the Grade? A Benchmark of Uncertainty Metrics for LLM-Based Automatic Assessment," introduces a rigorous framework for evaluating how well LLMs can quantify their own uncertainty when performing automated grading tasks.

The Uncertainty Problem in AI Systems

As large language models increasingly handle tasks that traditionally required human judgment—from content moderation to educational assessment—understanding when these systems are confident versus uncertain becomes critical. An LLM that grades student essays or evaluates content authenticity needs more than just accuracy; it needs to know when it doesn't know.

This research addresses a fundamental gap in the field: while numerous uncertainty quantification methods exist for LLMs, there has been no standardized benchmark to compare their effectiveness in the specific context of automated assessment. The implications extend far beyond education, touching any domain where AI systems must make evaluative judgments with appropriate confidence calibration.

Technical Framework and Methodology

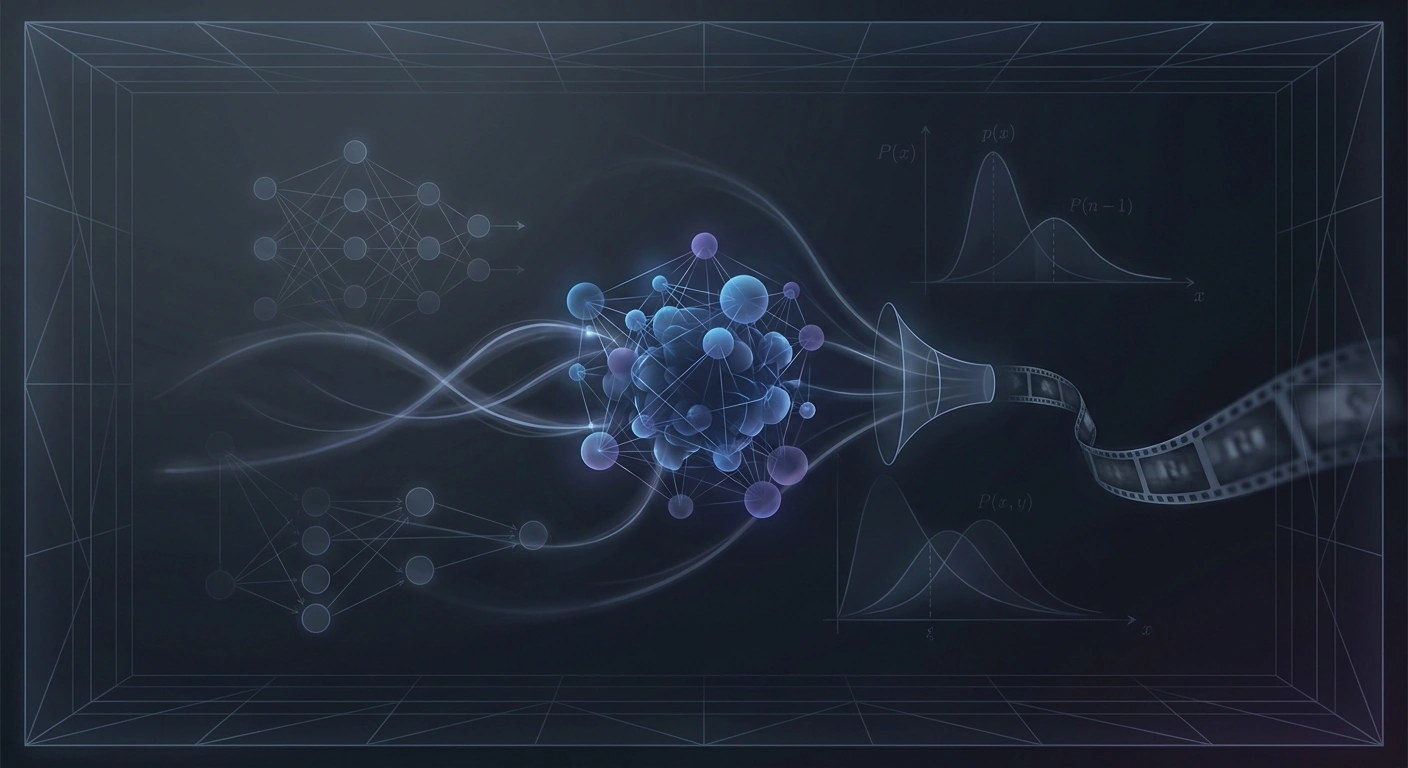

The benchmark compares multiple uncertainty metrics along several dimensions. The researchers examine both aleatoric uncertainty (irreducible randomness or ambiguity in the data itself, such as a borderline answer that two careful graders might score differently) and epistemic uncertainty (uncertainty that stems from the model's limited knowledge and could in principle be reduced), giving a fuller picture of where LLM-based assessors struggle.
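The article does not say how the benchmark separates these two kinds of uncertainty. One widely used decomposition, shown here as a minimal sketch rather than the paper's method, starts from several sampled grade distributions for the same answer (assumed here to come from different prompts, seeds, or ensemble members). The entropy of their average is the total uncertainty. The average entropy of the individual distributions is read as aleatoric, and the remainder (the mutual information, which reflects disagreement between passes) is read as epistemic. The function names and input format are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def entropy(p: Sequence[float]) -> float:
    """Shannon entropy (in nats) of a discrete probability distribution."""
    return -sum(x * math.log(x) for x in p if x > 0)


def decompose_uncertainty(samples: Sequence[Sequence[float]]) -> Tuple[float, float, float]:
    """Split total predictive entropy into aleatoric and epistemic parts.

    `samples` holds one probability vector over the same ordered set of grades
    per sampled pass (hypothetical setup: different prompts, seeds, or models).
    Returns (total, aleatoric, epistemic).
    """
    k = len(samples)
    n_grades = len(samples[0])
    # Average the sampled distributions to get the overall predictive distribution.
    mean: List[float] = [sum(s[i] for s in samples) / k for i in range(n_grades)]
    total = entropy(mean)
    # Aleatoric part: average entropy of the individual distributions.
    aleatoric = sum(entropy(s) for s in samples) / k
    # Epistemic part: disagreement between passes (mutual information, >= 0).
    epistemic = total - aleatoric
    return total, aleatoric, epistemic


# Passes agree but each is unsure between two grades: purely aleatoric.
print(decompose_uncertainty([[0.5, 0.5], [0.5, 0.5]]))
# Each pass is certain but the passes disagree: purely epistemic.
print(decompose_uncertainty([[1.0, 0.0], [0.0, 1.0]]))
```

Both examples have the same total entropy (log 2); the decomposition is what tells them apart.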

Key technical contributions include:

Token-level probability analysis: The study examines how probability distributions over generated tokens correlate with assessment accuracy. Models that spread probability mass across multiple possible grades exhibit different reliability patterns than those producing sharp distributions.

Semantic consistency metrics: By sampling multiple responses from the same model, the researchers measure how consistent the semantic content of the assessments remains. A model whose reasoning varies across samples while its final grade stays fixed shows different calibration characteristics from one whose reasoning and grade both vary.

Calibration curves and expected calibration error (ECE): The benchmark includes rigorous evaluation of whether stated confidence levels match empirical accuracy rates. A model claiming 90% confidence should be correct roughly 90% of the time—deviation from this ideal reveals miscalibration.
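The three families of metrics above can be made concrete with a short sketch. It assumes, hypothetically, that the grading model exposes log-probabilities for each candidate grade token and that several sampled grades are available per answer; `grade_distribution`, `majority_agreement`, and `expected_calibration_error` are illustrative names, not the benchmark's code. The consistency function compares final grades only, so it does not capture the reasoning-level semantic similarity the study also examines. ECE uses the common equal-width binning: the weighted average, over confidence bins, of the gap between accuracy and mean confidence.

```python
import math
from collections import Counter
from typing import Dict, List, Sequence, Tuple


def grade_distribution(grade_logprobs: Dict[str, float]) -> Dict[str, float]:
    """Renormalise log-probabilities of candidate grade tokens into a distribution.

    Assumes the serving API returns a log-probability for each grade token
    (hypothetical input format: {"A": -0.1, "B": -2.5, ...}).
    """
    m = max(grade_logprobs.values())  # subtract the max for numerical stability
    exps = {g: math.exp(lp - m) for g, lp in grade_logprobs.items()}
    z = sum(exps.values())
    return {g: e / z for g, e in exps.items()}


def majority_agreement(sampled_grades: Sequence[str]) -> Tuple[str, float]:
    """Majority grade over sampled responses and the fraction that agree with it.

    Compares final grades only; it does not measure reasoning-level consistency.
    """
    grade, count = Counter(sampled_grades).most_common(1)[0]
    return grade, count / len(sampled_grades)


def expected_calibration_error(
    confidences: Sequence[float], correct: Sequence[bool], n_bins: int = 10
) -> float:
    """Equal-width-bin ECE: weighted mean of |accuracy - mean confidence| per bin."""
    bins: List[List[Tuple[float, bool]]] = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # Bins are [i/n, (i+1)/n); a confidence of exactly 1.0 goes in the last bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))
    total = len(confidences)
    ece = 0.0
    for b in bins:
        if not b:
            continue
        mean_conf = sum(c for c, _ in b) / len(b)
        accuracy = sum(1 for _, ok in b if ok) / len(b)
        ece += (len(b) / total) * abs(accuracy - mean_conf)
    return ece


dist = grade_distribution({"A": -0.2, "B": -1.9, "C": -4.0})
confidence = max(dist.values())  # top-grade probability used as a confidence score
print(dist, confidence)
print(majority_agreement(["B", "B", "C", "B"]))
print(expected_calibration_error([0.9] * 10, [True] * 9 + [False]))
```

For example, ten grades issued at 0.9 confidence of which nine are correct give an ECE of essentially zero; if all ten were correct, the ECE would be about 0.1, signalling under-confidence.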

Implications for AI Authenticity and Trust

While this research focuses on educational assessment, its findings have direct relevance to AI systems evaluating content authenticity. Deepfake detection systems, content verification tools, and synthetic media classifiers all face the same fundamental challenge: knowing when to flag uncertainty rather than making overconfident predictions.

Consider a deepfake detection system analyzing a video. A well-calibrated system should recognize when a video falls into an ambiguous zone, perhaps because it was produced with generation techniques the detector was never trained on, rather than confidently declaring it authentic or synthetic. The uncertainty quantification methods benchmarked in this research could help build more trustworthy content authenticity systems.

Practical Deployment Considerations

The research highlights several practical factors affecting uncertainty metric selection:

Computational overhead: Some metrics require multiple forward passes through the model, significantly increasing inference costs. The benchmark evaluates this trade-off, helping practitioners choose methods appropriate for their latency and compute constraints.

Model architecture dependencies: Different uncertainty metrics work better with different model families. The findings suggest that no single metric dominates across all architectures, emphasizing the need for benchmark-driven selection.

Task sensitivity: Uncertainty characteristics vary with assessment difficulty. The benchmark includes tasks of varying complexity to reveal how metrics perform across the difficulty spectrum.
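A metric can look well behaved on easy items and still fail to flag errors on hard ones. One common way to check this, not necessarily the benchmark's protocol, is to treat each uncertainty score as a detector of grading errors, compute the area under the ROC curve (AUROC) for that detection task, and repeat it per difficulty bucket. The sketch below uses the pairwise (Mann-Whitney) form of AUROC, which is quadratic in the number of items and meant only for illustration; the difficulty labels and record format are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def error_detection_auroc(uncertainty: Sequence[float], correct: Sequence[bool]) -> float:
    """Probability that a randomly chosen wrong grade gets a higher uncertainty
    score than a randomly chosen correct one (ties count half).

    1.0 means the metric perfectly separates errors from correct grades; 0.5 is chance.
    """
    wrong = [u for u, ok in zip(uncertainty, correct) if not ok]
    right = [u for u, ok in zip(uncertainty, correct) if ok]
    if not wrong or not right:
        return float("nan")  # undefined when one class is missing
    score = 0.0
    for w in wrong:
        for r in right:
            if w > r:
                score += 1.0
            elif w == r:
                score += 0.5
    return score / (len(wrong) * len(right))


def auroc_by_difficulty(records: Sequence[Tuple[str, float, bool]]) -> Dict[str, float]:
    """Group (difficulty, uncertainty, correct) records and score each group separately."""
    groups: Dict[str, Tuple[List[float], List[bool]]] = defaultdict(lambda: ([], []))
    for difficulty, u, ok in records:
        groups[difficulty][0].append(u)
        groups[difficulty][1].append(ok)
    return {d: error_detection_auroc(us, oks) for d, (us, oks) in groups.items()}


records = [
    ("easy", 0.1, True), ("easy", 0.2, True), ("easy", 0.7, False),
    ("hard", 0.6, True), ("hard", 0.5, False), ("hard", 0.8, False),
]
print(auroc_by_difficulty(records))
```

In this toy data the metric separates errors perfectly on the easy bucket but is no better than chance on the hard one, which is the kind of pattern a difficulty-stratified evaluation is meant to expose.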

Broader Context in AI Reliability Research

This work connects to a growing body of research on making AI systems more reliable and trustworthy. As LLMs are deployed in higher-stakes applications—from content moderation to medical diagnosis to legal document review—understanding their uncertainty becomes essential for responsible deployment.

The benchmark also has implications for human-AI collaboration. Systems that accurately communicate uncertainty allow human reviewers to focus attention where it's most needed. In content authenticity applications, this could mean flagging borderline cases for human expert review while confidently handling clear-cut examples.
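The routing idea can be sketched as a simple confidence threshold: items at or above it are handled automatically and the rest go to a human reviewer. Measured on a labelled validation set, coverage (the share handled automatically) and accuracy on that share show what a given threshold buys. The threshold value, item format, and function names are assumptions for illustration; in practice the threshold would be tuned on held-out data, ideally after checking calibration.

```python
from typing import List, Sequence, Tuple


def route(items: Sequence[Tuple[str, str, float]], threshold: float) -> Tuple[List[str], List[str]]:
    """Split (item_id, grade, confidence) triples into auto-accepted and review queues."""
    auto: List[str] = []
    review: List[str] = []
    for item_id, _grade, confidence in items:
        if confidence >= threshold:
            auto.append(item_id)
        else:
            review.append(item_id)
    return auto, review


def coverage_and_accuracy(
    confidences: Sequence[float], correct: Sequence[bool], threshold: float
) -> Tuple[float, float]:
    """On a labelled validation set: share handled automatically, and accuracy on that share."""
    kept = [ok for conf, ok in zip(confidences, correct) if conf >= threshold]
    coverage = len(kept) / len(confidences)
    accuracy = sum(kept) / len(kept) if kept else float("nan")
    return coverage, accuracy


THRESHOLD = 0.8  # hypothetical value; tune on held-out data
queue = [("essay-1", "B", 0.95), ("essay-2", "C", 0.55), ("essay-3", "A", 0.80)]
print(route(queue, THRESHOLD))
print(coverage_and_accuracy([0.95, 0.55, 0.80, 0.90], [True, False, True, False], THRESHOLD))
```

Raising the threshold sends more items to human review (lower coverage) in exchange for higher accuracy on what remains automated, but only if the confidence scores are reasonably well calibrated.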

Looking Forward

The researchers note several areas for future work, including extending the benchmark to multimodal assessment scenarios. As AI systems increasingly evaluate video, audio, and mixed-media content, uncertainty quantification for multimodal inputs becomes crucial—directly relevant to synthetic media detection and digital authenticity verification.

This research provides a foundation for building AI systems that don't just make judgments, but understand the limits of their own knowledge. For anyone deploying LLMs in evaluative roles—whether grading essays, detecting synthetic content, or verifying digital authenticity—the findings offer practical guidance for selecting and implementing uncertainty metrics that enhance rather than undermine trust in AI systems.

Stay informed on AI video and digital authenticity. Follow Skrew AI News.