AI-Generated Fake Vehicle Damage Photos Fuel Insurance Fraud

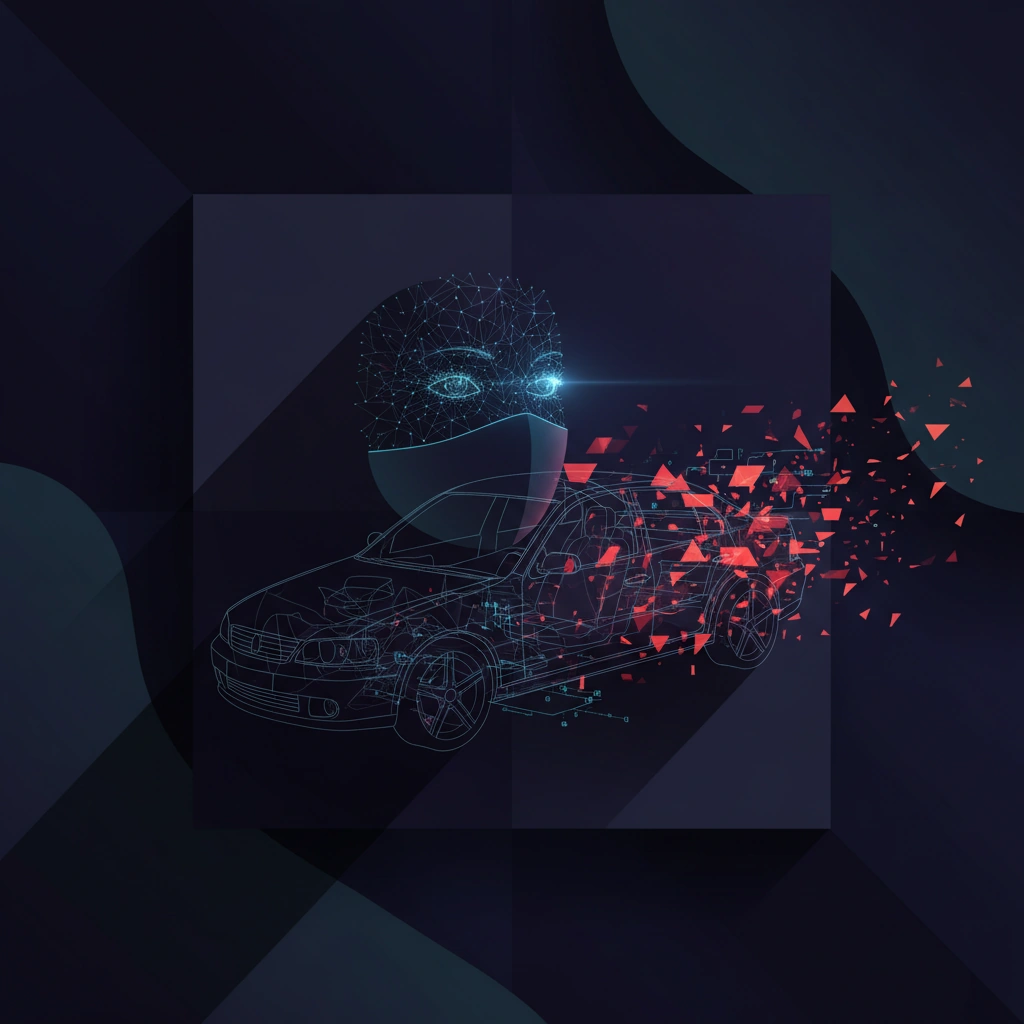

New research reveals how generative AI is being weaponized to create synthetic vehicle damage images, enabling a surge in fraudulent insurance claims.

A new wave of insurance fraud is emerging, powered by generative AI tools that can create convincingly realistic images of vehicle damage that never actually occurred. Research published on arXiv shows how synthetic media technologies, originally developed for creative and commercial applications, are being systematically exploited to defraud insurance companies.

The research highlights a troubling evolution in fraud techniques. Where traditional insurance scams required actual staged accidents or physical damage to vehicles, fraudsters can now generate photorealistic images of dents, scratches, and collision damage using AI image generation models. These synthetic images can be submitted as evidence for fraudulent claims without ever damaging a vehicle.

The Technical Challenge of Detection

What makes this threat particularly concerning is the sophistication of modern generative AI models. Contemporary image synthesis tools have largely overcome the telltale artifacts that once made fake images easy to spot, such as unnatural lighting, anatomical impossibilities, and texture inconsistencies. Vehicle damage is especially well suited to AI generation because the subject matter is inherently chaotic and varied: there is no single "correct" look for a crumpled fender, so synthetic outputs are harder to distinguish from authentic damage photography.

The research examines how well current generative AI models can produce vehicle damage imagery and analyzes the technical characteristics that might enable detection: metadata inconsistencies, compression artifacts, and subtle patterns in how AI models render reflective surfaces, shadows, and material deformation, all of which are critical elements in damage documentation.
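To make the metadata angle concrete, here is a minimal sketch of a rule-based metadata check. It is not the paper's method. It assumes the photo's tags have already been extracted into a flat dictionary keyed by standard EXIF tag names (`Make`, `Model`, `Software`, `DateTimeOriginal`), and the list of generator keywords is a hypothetical, illustrative placeholder. Each flag is weak evidence on its own, since screenshots and messaging apps routinely strip metadata from genuine photos.

```python
from datetime import datetime

# Hypothetical, illustrative keywords; a real system would need a maintained list.
GENERATOR_HINTS = ("diffusion", "dall-e", "midjourney", "firefly")

# EXIF stores DateTimeOriginal as "YYYY:MM:DD HH:MM:SS".
EXIF_TIME_FORMAT = "%Y:%m:%d %H:%M:%S"


def metadata_flags(tags: dict[str, str], claim_time: datetime) -> list[str]:
    """Return human-readable red flags for one photo's extracted metadata.

    `tags` is assumed to map EXIF tag names to string values, extracted
    beforehand by some other tool. Every flag is a weak signal only.
    """
    flags = []
    # Genuine phone photos normally record the camera maker and model.
    if not tags.get("Make") or not tags.get("Model"):
        flags.append("no camera make/model")
    # Some tools write their own name into the Software tag.
    software = tags.get("Software", "").lower()
    if any(hint in software for hint in GENERATOR_HINTS):
        flags.append(f"software field mentions a generator: {software!r}")
    # The capture time should exist, parse, and precede the claim.
    raw_time = tags.get("DateTimeOriginal")
    if raw_time is None:
        flags.append("no capture timestamp")
    else:
        try:
            taken = datetime.strptime(raw_time, EXIF_TIME_FORMAT)
        except ValueError:
            flags.append(f"unparseable capture timestamp: {raw_time!r}")
        else:
            if taken > claim_time:
                flags.append("captured after the claim was filed")
    return flags
```

A photo tagged only with `{"Software": "Stable Diffusion"}` would collect three flags, while a typical phone photo with make, model, and an earlier capture time would return an empty list. Metadata is trivially editable, so checks like this are best treated as one cheap input alongside pixel-level forensics rather than as a verdict.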

Implications for Digital Authenticity

This development represents a significant escalation in the synthetic media authentication challenge. Unlike deepfake videos of public figures or celebrity face swaps, these fraud applications involve mundane, everyday imagery where visual anomalies are harder to spot and the stakes are purely financial rather than reputational.

Insurance companies now face an arms race: as AI generation capabilities improve, detection systems must evolve in parallel. The research suggests that traditional fraud detection methods, which rely on claim patterns, historical data, and human expertise, are insufficient against AI-generated evidence. New technical approaches are needed that can identify synthetic imagery at scale.

Detection Technologies and Countermeasures

The paper explores several technical approaches to identifying AI-generated vehicle damage photos. These include forensic analysis of image generation signatures, neural network-based classifiers trained to spot synthetic content, and blockchain-based provenance systems that could verify that images were captured by authenticated devices at specific times and locations.
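The core of a blockchain-based provenance record is a tamper-evident, append-only log. The sketch below shows only that part: a single-process hash chain in which each capture record stores the SHA-256 of the image plus the hash of the previous record. It is a simplified illustration, not the paper's design, and it omits everything that makes a real blockchain distributed (replication, consensus, signatures). The record fields (`device_id`, `captured_at`, `location`) and the class name `ProvenanceLog` are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(payload: dict) -> str:
    # Canonical JSON (sorted keys, no whitespace) so a record always hashes identically.
    encoded = json.dumps(payload, sort_keys=True, separators=(",", ":")).encode()
    return hashlib.sha256(encoded).hexdigest()


@dataclass
class ProvenanceLog:
    records: list[dict] = field(default_factory=list)

    def append(self, image_bytes: bytes, device_id: str, captured_at: str, location: str) -> dict:
        """Register a photo at capture time, chained to the previous record."""
        body = {
            "image_sha256": hashlib.sha256(image_bytes).hexdigest(),
            "device_id": device_id,
            "captured_at": captured_at,
            "location": location,
            "prev_hash": self.records[-1]["hash"] if self.records else GENESIS,
        }
        record = {**body, "hash": _digest(body)}
        self.records.append(record)
        return record

    def verify_chain(self) -> bool:
        """Recompute every hash; any edited record breaks the chain from that point."""
        prev = GENESIS
        for record in self.records:
            body = {k: v for k, v in record.items() if k != "hash"}
            if record["prev_hash"] != prev or _digest(body) != record["hash"]:
                return False
            prev = record["hash"]
        return True

    def is_registered(self, image_bytes: bytes) -> bool:
        """True only if these exact bytes were logged at capture time."""
        digest = hashlib.sha256(image_bytes).hexdigest()
        return any(r["image_sha256"] == digest for r in self.records)
```

An insurer could check that a submitted photo `is_registered` and that the log still passes `verify_chain`; editing any stored field, such as a record's location, makes verification fail. The scheme only proves what was logged, so a generated image registered by a compromised device would still pass, which is why the capture device itself has to be authenticated.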

Content authenticity standards like C2PA (Coalition for Content Provenance and Authenticity) could play a crucial role in combating this fraud. By embedding cryptographically signed metadata into images at the moment of capture, insurance companies could verify that damage photos came from genuine smartphone cameras rather than AI generation tools. However, widespread adoption of such standards remains years away.
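The capture-time signing idea can be illustrated with a minimal bind-then-verify sketch. C2PA itself uses certificate-based public-key signatures over a structured manifest; this example swaps in an HMAC from Python's standard library, which is symmetric (the verifier must hold the same key), so it shows the principle rather than the standard's actual mechanism. The key `DEVICE_KEY` and the function names are hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical per-device secret. A real C2PA deployment would use a private
# signing key with a certificate instead of a shared HMAC secret.
DEVICE_KEY = b"example-device-secret"


def _manifest_bytes(image_bytes: bytes, metadata: dict) -> bytes:
    # Bind the pixel data (via its hash) and the capture metadata into one payload.
    # The image hash is written last so metadata cannot override it.
    manifest = {**metadata, "image_sha256": hashlib.sha256(image_bytes).hexdigest()}
    return json.dumps(manifest, sort_keys=True, separators=(",", ":")).encode()


def sign_at_capture(image_bytes: bytes, metadata: dict, key: bytes = DEVICE_KEY) -> str:
    """Run on the camera when the photo is taken; returns a hex authentication tag."""
    return hmac.new(key, _manifest_bytes(image_bytes, metadata), hashlib.sha256).hexdigest()


def verify_claim_photo(image_bytes: bytes, metadata: dict, tag: str, key: bytes = DEVICE_KEY) -> bool:
    """Run by the insurer; any change to pixels or metadata invalidates the tag."""
    expected = sign_at_capture(image_bytes, metadata, key)
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, tag)
```

Altering a single byte of the image, or shifting the recorded capture time, makes `verify_claim_photo` return `False`, and a generator that never held the key cannot produce a valid tag at all. A photo without any tag is merely unverified rather than proven fake, which is one reason the benefit depends on broad adoption by camera and phone makers.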

The Broader Synthetic Media Threat Landscape

Vehicle insurance fraud is just one application of a much larger problem. As generative AI tools become more accessible and powerful, they enable synthetic media fraud across numerous domains, from real estate documentation to product condition verification in e-commerce. Any industry that relies on visual evidence for decision-making faces similar vulnerabilities.

The research underscores that synthetic media detection cannot be an afterthought. As AI generation capabilities continue to advance, detection systems must be developed in parallel, and authentication standards must be built into the infrastructure of digital imaging from the camera sensor onward.

This emerging fraud vector demonstrates that deepfakes and synthetic media aren't just problems for social media platforms and news organizations—they're creating tangible financial threats across industries that have relied on photographic evidence for decades. The insurance sector's response to this challenge may set important precedents for how other industries address the authentication crisis created by generative AI.

Stay informed on AI video and digital authenticity. Follow Skrew AI News.