AgentScope: Building Scalable Multi-Agent LLM Systems

AgentScope provides a flexible framework for orchestrating multiple LLM agents with built-in communication protocols, fault tolerance, and scalability features for complex AI workflows.

As large language models become foundational infrastructure for AI applications, the challenge has shifted from single-model deployment to orchestrating multiple specialized agents working in concert. AgentScope, an open-source framework developed by researchers at Alibaba, addresses this complexity head-on with a comprehensive toolkit for building, deploying, and managing multi-agent systems at scale.

The Multi-Agent Paradigm Shift

Traditional LLM applications typically involve a single model handling all tasks—from understanding user intent to generating responses. This monolithic approach quickly hits limitations when applications require diverse capabilities: reasoning, tool use, memory management, and domain-specific expertise. Multi-agent architectures decompose these responsibilities across specialized agents that communicate, collaborate, and coordinate to accomplish complex objectives.

AgentScope embraces this paradigm with a modular design philosophy. Rather than prescribing rigid interaction patterns, the framework provides flexible primitives that developers can compose into arbitrary agent topologies. This matters significantly for synthetic media pipelines, where different stages—content planning, asset generation, quality verification, and output synthesis—often benefit from specialized processing.

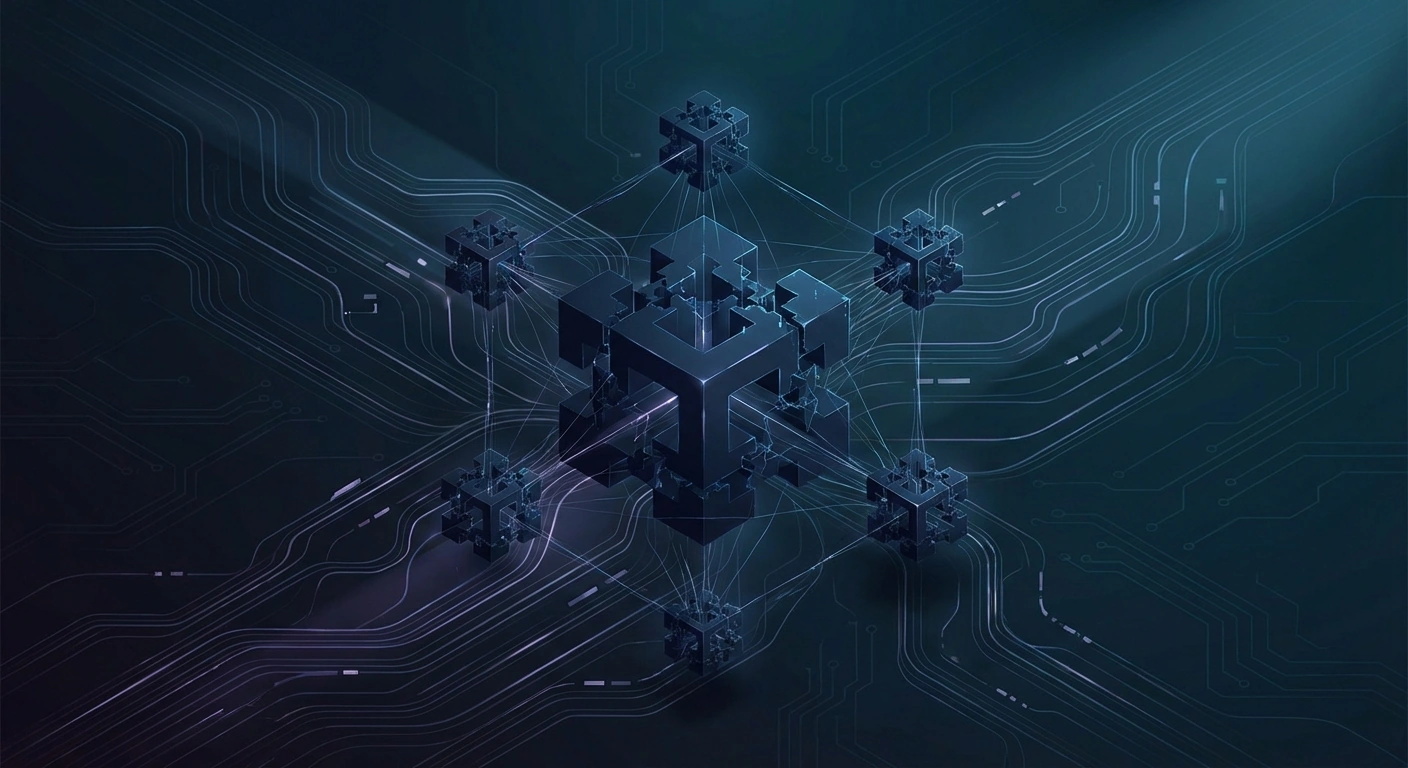

Core Architecture Components

At the foundation of AgentScope lies its agent abstraction layer. Each agent encapsulates an LLM backend (supporting OpenAI, Anthropic, local models, and others), a system prompt defining its role, memory modules for context retention, and optional tool integrations. This standardized interface allows agents to be swapped, upgraded, or specialized without disrupting the broader system architecture.
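To make the idea of a standardized agent interface concrete, here is a minimal sketch in plain Python. It does not use AgentScope's real API: `Agent`, `ModelBackend`, `EchoBackend`, and `UpperBackend` are hypothetical names invented for illustration, and the backends are offline stand-ins for a hosted or local model.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Protocol


class ModelBackend(Protocol):
    """Anything that turns a prompt into text (hosted API, local model, ...)."""

    def generate(self, prompt: str) -> str: ...


class EchoBackend:
    """Hypothetical stand-in backend so the sketch runs without network access."""

    def generate(self, prompt: str) -> str:
        return f"echo: {prompt.splitlines()[-1]}"


class UpperBackend:
    """A second hypothetical backend, used to show swapping."""

    def generate(self, prompt: str) -> str:
        return prompt.splitlines()[-1].upper()


@dataclass
class Agent:
    name: str
    system_prompt: str
    backend: ModelBackend
    memory: List[str] = field(default_factory=list)
    tools: Dict[str, Callable[..., str]] = field(default_factory=dict)

    def reply(self, user_input: str) -> str:
        # Build the prompt from the role, remembered turns, and the new input.
        prompt = "\n".join([self.system_prompt, *self.memory, user_input])
        output = self.backend.generate(prompt)
        # Record both sides of the exchange for later context.
        self.memory.extend([user_input, output])
        return output


planner = Agent("planner", "You plan content.", EchoBackend())
first = planner.reply("outline a video")
planner.backend = UpperBackend()  # swap the backend; the rest is unchanged
second = planner.reply("outline a video")
```

Because the agent only depends on the `generate` method, replacing the backend does not touch the memory or the calling code, which is the property the paragraph above describes.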

The message passing protocol enables inter-agent communication through structured message objects containing content, metadata, and routing information. AgentScope supports both synchronous request-response patterns and asynchronous event-driven communication, accommodating diverse workflow requirements from real-time chat applications to batch processing pipelines.
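The two communication styles can be sketched with the standard library alone. The `Message` fields below mirror the description above (content, metadata, routing), but the class and function names are assumptions for illustration, not AgentScope's actual message type.

```python
import asyncio
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class Message:
    sender: str
    recipient: str  # routing information
    content: str
    metadata: Dict[str, Any] = field(default_factory=dict)


def handle_sync(msg: Message) -> Message:
    """Synchronous request-response: the caller blocks until the reply returns."""
    return Message(
        sender=msg.recipient,
        recipient=msg.sender,
        content=f"ack: {msg.content}",
        metadata={"in_reply_to": msg.metadata.get("id")},
    )


async def consumer(inbox: asyncio.Queue, seen: List[str]) -> None:
    """Asynchronous, event-driven: process messages whenever they arrive."""
    while True:
        msg = await inbox.get()
        if msg is None:  # sentinel: no more messages
            break
        seen.append(msg.content)


async def run_async_demo() -> List[str]:
    inbox: asyncio.Queue = asyncio.Queue()
    seen: List[str] = []
    task = asyncio.create_task(consumer(inbox, seen))
    for i in range(3):
        await inbox.put(Message("planner", "writer", f"job {i}"))
    await inbox.put(None)
    await task
    return seen


reply = handle_sync(Message("user", "planner", "hello", {"id": 1}))
processed = asyncio.run(run_async_demo())
```

The synchronous path suits chat-style exchanges where the caller needs the answer immediately; the queue-based path lets a producer keep submitting work without waiting on each result.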

Perhaps most critically for production deployments, AgentScope includes fault tolerance mechanisms. Agent failures, whether from API rate limits, model errors, or infrastructure issues, are handled through configurable retry policies, fallback agents, and circuit breakers that prevent cascading failures across the system.
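The three mechanisms named here are general resilience patterns, and a rough sketch of each fits in a few dozen lines. Everything below is hypothetical illustration code (`CircuitBreaker`, `call_with_retry`, and the failing functions are invented names); it shows the patterns themselves, not how AgentScope implements or configures them.

```python
import time
from typing import Callable, List, Optional


class CircuitOpenError(RuntimeError):
    """Raised when the breaker refuses to call a failing dependency."""


class CircuitBreaker:
    """Stops calling a dependency after `max_failures` consecutive errors."""

    def __init__(self, max_failures: int = 3, reset_after: float = 30.0):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], str]) -> str:
        if self.opened_at is not None:
            if time.monotonic() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; skipping call")
            # Cool-down elapsed: allow one trial call; one more failure re-opens.
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = time.monotonic()
            raise
        self.failures = 0
        return result


def call_with_retry(fn, retries=3, base_delay=0.0, fallback=None):
    """Retry with exponential backoff, then use the fallback if one is given."""
    for attempt in range(retries):
        try:
            return fn()
        except Exception:
            if attempt < retries - 1:
                time.sleep(base_delay * (2 ** attempt))
    if fallback is not None:
        return fallback()
    raise RuntimeError(f"all {retries} attempts failed")


attempts = {"n": 0}


def flaky() -> str:
    """Fails twice (for example, rate limited), then succeeds."""
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise TimeoutError("rate limited")
    return "primary ok"


def always_down() -> str:
    raise ConnectionError("backend unavailable")


recovered = call_with_retry(flaky, retries=3)
degraded = call_with_retry(always_down, retries=2, fallback=lambda: "fallback ok")

breaker = CircuitBreaker(max_failures=2, reset_after=60.0)
outcomes: List[str] = []
for _ in range(3):
    try:
        breaker.call(always_down)
    except CircuitOpenError:
        outcomes.append("skipped")
    except ConnectionError:
        outcomes.append("failed")
```

The third breaker call is skipped without touching the failing backend at all, which is how a breaker keeps one unhealthy agent from tying up the agents that depend on it.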

Orchestration and Workflow Management

AgentScope provides several built-in orchestration patterns while remaining extensible for custom workflows:

Sequential pipelines pass information through a chain of agents, each transforming or enriching the output before passing it forward. This pattern suits content generation workflows where planning, drafting, editing, and formatting occur as distinct stages.

Parallel fan-out/fan-in distributes tasks across multiple agents simultaneously, then aggregates results. For synthetic media applications, this enables generating multiple asset variations concurrently before selecting optimal outputs.

Hierarchical supervision introduces manager agents that delegate subtasks to worker agents, monitor progress, and handle exceptions. This proves valuable for complex generation tasks requiring coordination across modalities—text, image, audio, and video components assembled into cohesive outputs.
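The three patterns above can be sketched with `asyncio`. This is an illustration under stated assumptions rather than AgentScope's pipeline API: `make_agent`, `sequential`, `fan_out_fan_in`, and `supervise` are hypothetical helpers, and each "agent" simply appends a tag so the data flow is visible.

```python
import asyncio
from typing import Awaitable, Callable, Dict, List

AgentFn = Callable[[str], Awaitable[str]]


def make_agent(tag: str) -> AgentFn:
    """Hypothetical agent: appends its tag to whatever it receives."""
    async def agent(text: str) -> str:
        await asyncio.sleep(0)  # stand-in for a model call
        return f"{text}>{tag}"
    return agent


async def sequential(agents: List[AgentFn], text: str) -> str:
    """Chain: each agent receives the previous agent's output."""
    for agent in agents:
        text = await agent(text)
    return text


async def fan_out_fan_in(agents: List[AgentFn], text: str,
                         pick: Callable[[List[str]], str]) -> str:
    """Run all agents concurrently on the same input, then aggregate."""
    results = await asyncio.gather(*(agent(text) for agent in agents))
    return pick(list(results))


async def supervise(workers: Dict[str, AgentFn],
                    subtasks: Dict[str, str]) -> Dict[str, str]:
    """Manager: delegate each subtask to a named worker and trap failures."""
    report: Dict[str, str] = {}
    for name, task in subtasks.items():
        try:
            report[name] = await workers[name](task)
        except Exception as exc:
            report[name] = f"failed: {exc}"
    return report


async def broken(task: str) -> str:
    raise ValueError("no audio model")


stages = [make_agent(t) for t in ("plan", "draft", "edit")]
chained = asyncio.run(sequential(stages, "brief"))
longest = asyncio.run(
    fan_out_fan_in([make_agent("a"), make_agent("bbb")], "brief",
                   lambda results: max(results, key=len))
)
report = asyncio.run(
    supervise({"text": make_agent("text"), "audio": broken},
              {"text": "script", "audio": "narration"})
)
```

In the supervision case the manager records the audio worker's failure and still returns the text result, rather than letting one exception abort the whole job.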

The framework's workflow engine supports both programmatic definition through Python code and declarative specification via configuration files, lowering the barrier for teams with varying technical backgrounds.
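As a rough illustration of the programmatic versus declarative split, the sketch below builds the same three-step pipeline from a JSON document and from ordinary code. The config schema (`pipeline`, `step`) and the `REGISTRY` of step names are assumptions made up for this example; AgentScope's real configuration format may look quite different.

```python
import json
from typing import Callable, Dict, List

# Hypothetical registry mapping names used in config files to step functions.
REGISTRY: Dict[str, Callable[[str], str]] = {
    "strip": str.strip,
    "title": str.title,
    "exclaim": lambda s: s + "!",
}

# Declarative definition: someone can edit this without touching Python code.
CONFIG = """
{
  "pipeline": [
    {"step": "strip"},
    {"step": "title"},
    {"step": "exclaim"}
  ]
}
"""


def build_pipeline(config_text: str) -> Callable[[str], str]:
    """Resolve each named step and return a callable that runs them in order."""
    steps: List[Callable[[str], str]] = []
    for entry in json.loads(config_text)["pipeline"]:
        name = entry["step"]
        if name not in REGISTRY:
            raise KeyError(f"unknown step: {name}")
        steps.append(REGISTRY[name])

    def run(text: str) -> str:
        for step in steps:
            text = step(text)
        return text

    return run


def from_code(text: str) -> str:
    """Programmatic definition of the same workflow."""
    return text.strip().title() + "!"


from_config = build_pipeline(CONFIG)
```

Both definitions produce the same result for the same input; the declarative one trades flexibility for a format that can be reviewed and changed without reading the implementation.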

Memory and State Management

Effective multi-agent systems require sophisticated memory management. AgentScope implements a tiered memory architecture with working memory for immediate context, episodic memory for conversation history, and semantic memory for long-term knowledge retrieval.

Each memory tier supports configurable retention policies, compression strategies, and retrieval mechanisms. For long-running synthetic media projects spanning multiple sessions, this enables agents to maintain consistency in style, tone, and creative direction across extended timeframes.
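A toy version of the three tiers helps show how retention, compression, and retrieval differ per tier. The `TieredMemory` class below is a hypothetical sketch: it uses a bounded deque, a naive "count the dropped turns" compression, and keyword lookup, where a production system would more plausibly use summarization and embedding-based retrieval.

```python
from collections import deque
from typing import Deque, Dict, List


class TieredMemory:
    def __init__(self, working_size: int = 3):
        # Working memory: only the most recent turns (retention by count).
        self.working: Deque[str] = deque(maxlen=working_size)
        # Episodic memory: the conversation history.
        self.episodic: List[str] = []
        # Semantic memory: long-lived facts keyed by topic.
        self.semantic: Dict[str, str] = {}

    def observe(self, turn: str) -> None:
        self.working.append(turn)
        self.episodic.append(turn)

    def remember_fact(self, topic: str, fact: str) -> None:
        self.semantic[topic] = fact

    def compress_episodic(self, keep_last: int) -> None:
        """Naive compression: replace older turns with a one-line marker."""
        cut = len(self.episodic) - keep_last
        if cut > 0:
            marker = f"[{cut} earlier turns omitted]"
            self.episodic = [marker] + self.episodic[cut:]

    def retrieve(self, query: str) -> List[str]:
        """Keyword retrieval: return facts whose topic appears in the query."""
        words = set(query.lower().split())
        return [fact for topic, fact in self.semantic.items()
                if topic.lower() in words]


mem = TieredMemory(working_size=2)
for turn in ["t1", "t2", "t3", "t4"]:
    mem.observe(turn)
mem.remember_fact("style", "warm, hand-drawn look")
mem.compress_episodic(keep_last=2)
```

The style fact survives no matter how many turns are evicted from working memory or compressed out of the history, which is what lets an agent keep a consistent creative direction across sessions.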

The framework also provides shared memory spaces that multiple agents can read from and write to, facilitating collaboration without explicit message passing. A content planning agent might populate a shared workspace with asset specifications that generation agents consume independently.
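The planning-and-generation example can be sketched as a small blackboard. `SharedWorkspace` and the two agent functions are hypothetical names for illustration, and the `spec/` and `asset/` key prefixes are an invented convention; the point is only that the agents coordinate through shared state rather than by messaging each other.

```python
import threading
from typing import Any, Dict, List


class SharedWorkspace:
    """Thread-safe key-value space that several agents can read and write."""

    def __init__(self) -> None:
        self._data: Dict[str, Any] = {}
        self._lock = threading.Lock()

    def write(self, key: str, value: Any) -> None:
        with self._lock:
            self._data[key] = value

    def read(self, key: str, default: Any = None) -> Any:
        with self._lock:
            return self._data.get(key, default)

    def keys_with_prefix(self, prefix: str) -> List[str]:
        with self._lock:
            return sorted(k for k in self._data if k.startswith(prefix))


def planning_agent(ws: SharedWorkspace) -> None:
    """Populate the workspace with asset specifications."""
    ws.write("spec/intro", {"kind": "image", "prompt": "title card"})
    ws.write("spec/outro", {"kind": "audio", "prompt": "closing jingle"})


def generation_agent(ws: SharedWorkspace, kind: str) -> None:
    """Consume only the specs matching this agent's modality."""
    for key in ws.keys_with_prefix("spec/"):
        spec = ws.read(key)
        if spec["kind"] == kind:
            asset_key = key.replace("spec/", "asset/", 1)
            ws.write(asset_key, f"<{kind}: {spec['prompt']}>")


workspace = SharedWorkspace()
planning_agent(workspace)
workers = [threading.Thread(target=generation_agent, args=(workspace, kind))
           for kind in ("image", "audio")]
for worker in workers:
    worker.start()
for worker in workers:
    worker.join()
```

The generation agents never learn who wrote the specs, so planners and generators can be added or replaced independently.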

Tool Integration and External Systems

AgentScope's tool framework standardizes how agents interact with external capabilities. Tools are defined as callable functions with typed parameters and return values, allowing the framework to generate appropriate prompts and parse model outputs automatically.
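One common way to get from a typed function to a model-facing description is to introspect the signature. The sketch below uses only Python's `inspect` and `typing` modules; `describe_tool`, `call_tool`, and the `resize` tool are hypothetical, the output schema is an assumed JSON-style shape, and only simple parameter types (`str`, `int`, `float`, `bool`) are handled.

```python
import inspect
import json
from typing import Any, Callable, Dict, get_type_hints

JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe_tool(fn: Callable[..., Any]) -> Dict[str, Any]:
    """Derive a JSON-style description from a function's signature."""
    hints = get_type_hints(fn)
    params: Dict[str, Dict[str, str]] = {}
    required = []
    for name, param in inspect.signature(fn).parameters.items():
        params[name] = {"type": JSON_TYPES.get(hints.get(name), "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "parameters": params,
        "required": required,
    }


def call_tool(fn: Callable[..., Any], model_output: str) -> Any:
    """Parse JSON arguments produced by a model, type-check them, and call."""
    hints = get_type_hints(fn)
    args = json.loads(model_output)
    for key, value in args.items():
        expected = hints.get(key)
        # Only plain classes are checked; generic types are out of scope here.
        if expected is not None and not isinstance(value, expected):
            raise TypeError(f"{key} should be {expected.__name__}")
    return fn(**args)


def resize(width: int, height: int, keep_aspect: bool = True) -> str:
    """Resize an image to the given dimensions."""
    return f"{width}x{height} keep_aspect={keep_aspect}"


schema = describe_tool(resize)
result = call_tool(resize, '{"width": 640, "height": 360}')
```

The schema would be placed in the prompt so the model knows which arguments to produce, and `call_tool` closes the loop by validating whatever the model returns before the function runs.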

Built-in tool categories include web search, code execution, file operations, and API integrations. For synthetic media applications, teams can define custom tools wrapping generation APIs—image synthesis endpoints, voice cloning services, or video generation models—enabling agents to orchestrate complex multi-modal workflows through natural language instructions.

Scalability and Deployment

Production deployments demand scalability beyond single-machine limitations. AgentScope supports distributed execution through its actor-based runtime, allowing agents to run across multiple processes, machines, or cloud instances while maintaining communication through the message protocol.
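The actor model behind this kind of runtime can be shown in miniature: each agent owns a mailbox and handles one message at a time, so agents share nothing and interact only through messages. The `Actor` class below is a hypothetical in-process sketch using threads and queues; in a distributed deployment the mailbox would sit behind a network transport, which this example does not attempt to model.

```python
import queue
import threading
from typing import Callable, List, Optional


class Actor:
    """An agent with a private mailbox, processed one message at a time."""

    def __init__(self, name: str, handler: Callable[[str], str],
                 downstream: Optional["Actor"] = None):
        self.name = name
        self.handler = handler
        self.downstream = downstream
        self.mailbox: "queue.Queue[Optional[str]]" = queue.Queue()
        self.outputs: List[str] = []
        self._thread = threading.Thread(target=self._run, daemon=True)

    def start(self) -> None:
        self._thread.start()

    def send(self, message: Optional[str]) -> None:
        self.mailbox.put(message)

    def join(self) -> None:
        self._thread.join()

    def _run(self) -> None:
        while True:
            message = self.mailbox.get()
            if message is None:  # shutdown signal, passed down the chain
                if self.downstream is not None:
                    self.downstream.send(None)
                return
            result = self.handler(message)
            self.outputs.append(result)
            if self.downstream is not None:
                self.downstream.send(result)


reviewer = Actor("reviewer", lambda text: f"approved({text})")
writer = Actor("writer", lambda text: f"draft({text})", downstream=reviewer)
for actor in (reviewer, writer):
    actor.start()
for topic in ("intro", "outro"):
    writer.send(topic)
writer.send(None)
for actor in (writer, reviewer):
    actor.join()
```

Because the writer only knows how to `send` to its downstream actor, moving the reviewer to another process or machine would change the transport behind `send` but not the writer's logic.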

The framework integrates with container orchestration platforms, providing health checks, graceful shutdown handling, and resource management suitable for Kubernetes deployments. Observability features, including structured logging, distributed tracing, and metrics collection, make it possible to monitor complex agent interactions in production environments.
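To ground three of these operational features, the sketch below combines JSON-structured logs carrying a trace ID, a health report of the kind a liveness or readiness probe might read, and a shutdown flag that stops new work from being accepted. `JsonFormatter`, `make_logger`, and `Worker` are hypothetical names built on Python's standard `logging` module, not AgentScope components, and the flag is set directly here where a real service would set it from a termination signal handler.

```python
import io
import json
import logging
import threading
import uuid


class JsonFormatter(logging.Formatter):
    """Emit one JSON object per record so logs are machine-parseable."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "level": record.levelname,
            "message": record.getMessage(),
            "agent": getattr(record, "agent", None),
            "trace_id": getattr(record, "trace_id", None),
        })


def make_logger(stream) -> logging.Logger:
    # A unique logger name per call avoids stacking duplicate handlers.
    logger = logging.getLogger(f"agents.{uuid.uuid4().hex}")
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


class Worker:
    def __init__(self, logger: logging.Logger):
        self.logger = logger
        self.stop_requested = threading.Event()
        self.in_flight = 0

    def health(self) -> dict:
        """What a health-check endpoint might return."""
        status = "stopping" if self.stop_requested.is_set() else "ok"
        return {"status": status, "in_flight": self.in_flight}

    def handle(self, task: str, trace_id: str) -> bool:
        if self.stop_requested.is_set():
            return False  # refuse new work during shutdown
        self.in_flight += 1
        self.logger.info("task done: %s", task,
                         extra={"agent": "worker", "trace_id": trace_id})
        self.in_flight -= 1
        return True


buffer = io.StringIO()
worker = Worker(make_logger(buffer))
accepted = worker.handle("render", trace_id="t-1")
worker.stop_requested.set()  # in a service, a SIGTERM handler would do this
rejected = worker.handle("render again", trace_id="t-2")
records = [json.loads(line) for line in buffer.getvalue().splitlines()]
```

Carrying the same `trace_id` through every agent that touches a request is what allows log lines from different agents to be stitched back into a single trace.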

Implications for Synthetic Media

Multi-agent architectures have particular relevance for synthetic media pipelines. Generating consistent, high-quality synthetic content, such as AI-generated video or synthesized voice, and running the deepfake detection systems that scrutinize it, often involves more than a single model handles effectively.

AgentScope's orchestration capabilities enable decomposing these workflows into specialized stages: content planning agents defining creative direction, generation agents producing assets, quality assurance agents detecting artifacts or inconsistencies, and assembly agents composing final outputs. Each stage can leverage purpose-built models and tools while the framework handles coordination overhead.

As synthetic media capabilities advance, the infrastructure supporting these systems becomes increasingly critical. Frameworks like AgentScope represent the maturation of multi-agent development from research curiosity to production-ready tooling.
