The 7 Layers of Agentic AI: A Technical Framework

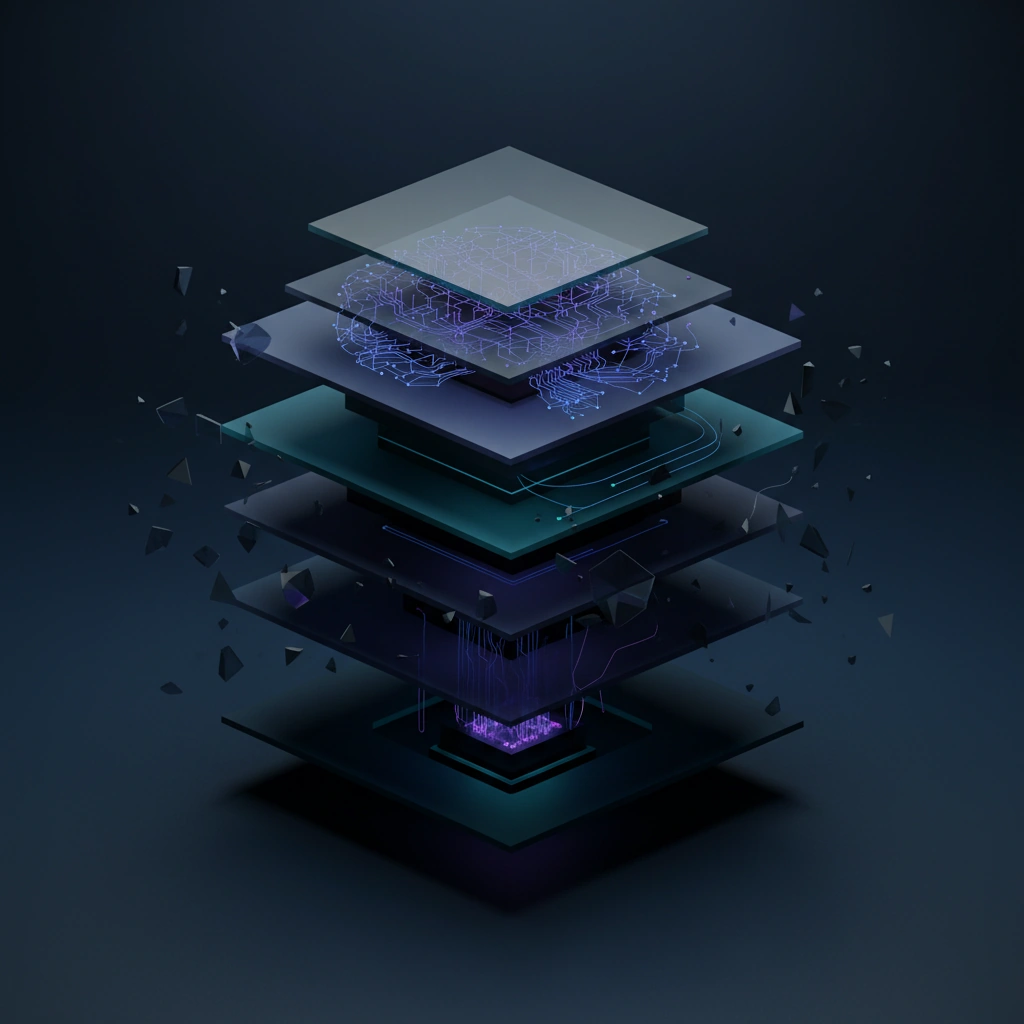

A comprehensive technical breakdown of agentic AI architecture into seven distinct layers, from memory systems to orchestration, providing clarity in an often overhyped field with practical implementation insights.

As agentic AI systems become increasingly sophisticated, understanding their underlying architecture has become critical for developers and researchers. A new framework breaks down these complex systems into seven distinct technical layers, providing much-needed clarity in a field often clouded by marketing hype.

Understanding Agentic AI Architecture

Agentic AI refers to systems capable of autonomous decision-making, goal pursuit, and adaptive behavior without constant human intervention. Unlike traditional AI models that simply respond to prompts, agentic systems maintain state, plan actions, and execute multi-step workflows. This architectural complexity demands a systematic approach to understanding how these systems function.

The seven-layer framework organizes agentic AI capabilities from foundational memory systems up through high-level orchestration, much as the OSI model divides computer networking into layers. Each layer builds on the ones below it, adding increasingly sophisticated autonomous capabilities.

Layers 1-3: Foundation and Memory

The base layers focus on memory management and knowledge retention. Layer 1 encompasses episodic memory systems that store interaction histories, while Layer 2 handles semantic memory for factual knowledge. Layer 3 introduces working memory mechanisms that maintain context across multi-turn interactions.
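
To make the three memory types concrete, here is a minimal sketch that maps them onto plain Python data structures. The class name `AgentMemory` and its methods are hypothetical illustrations, not part of the framework; real systems would back episodic and semantic memory with persistent storage rather than in-process lists and dicts.

```python
from collections import deque


class AgentMemory:
    """Hypothetical container mapping Layers 1-3 onto plain data structures."""

    def __init__(self, working_capacity=4):
        self.episodic = []                             # Layer 1: full interaction history, never evicted
        self.semantic = {}                             # Layer 2: factual knowledge keyed by topic
        self.working = deque(maxlen=working_capacity)  # Layer 3: bounded window of recent turns

    def record_turn(self, role, text):
        turn = (role, text)
        self.episodic.append(turn)
        self.working.append(turn)  # the deque drops its oldest turn once it is full

    def learn_fact(self, key, value):
        self.semantic[key] = value

    def context(self):
        """Assemble prompt context: stored facts first, then the recent turns."""
        facts = [f"{key}: {value}" for key, value in sorted(self.semantic.items())]
        turns = [f"{role}: {text}" for role, text in self.working]
        return "\n".join(facts + turns)
```

The bounded `deque` captures the key distinction: working memory forgets old turns automatically, while the episodic log keeps everything for later retrieval.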

These memory architectures are crucial for applications in synthetic media generation, where AI systems must maintain consistency across generated frames, remember stylistic choices, and build coherent narratives. Vector databases, retrieval-augmented generation (RAG), and attention mechanisms form the technical backbone of these layers.
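
The retrieval step behind RAG can be sketched in a few lines: score stored passages by cosine similarity to a query vector and prepend the best matches to the prompt. This is a minimal sketch under stated assumptions; the three-dimensional vectors below are hand-made stand-ins for real embeddings, and a linear scan stands in for a vector database index.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec, store, k=2):
    """Return the texts of the k stored passages most similar to the query."""
    ranked = sorted(store, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(question, query_vec, store, k=2):
    """Augment the question with retrieved passages before it reaches the model."""
    passages = "\n".join(f"- {p}" for p in retrieve(query_vec, store, k))
    return f"Context:\n{passages}\n\nQuestion: {question}"


# Toy (text, embedding) pairs; the vectors are illustrative, not from a real model.
STORE = [
    ("Hero wears a red jacket", [0.9, 0.1, 0.0]),
    ("Scene 2 is set at night", [0.1, 0.9, 0.1]),
    ("Palette: muted blues", [0.2, 0.2, 0.9]),
]
```

A query such as `[1.0, 0.0, 0.1]` pulls back the costume note first, which is the kind of lookup that keeps a character's appearance consistent across generated frames.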

Layers 4-5: Reasoning and Planning

The middle layers implement reasoning capabilities and strategic planning. Layer 4 introduces chain-of-thought reasoning, allowing agents to decompose complex problems into manageable steps. This includes techniques like ReAct (Reasoning + Acting) patterns where agents interleave thought processes with actions.
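
The ReAct pattern reduces to a loop: the model emits a thought and an action, the runtime executes the action, and the resulting observation is appended to the transcript before the next step. The sketch below assumes a text format of `Action: tool[arg]` and `Final Answer: ...`; the `scripted_policy` function is a hypothetical stand-in for a language model call, and the `duration` tool is invented for illustration.

```python
import re

ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*)\]")


def react_loop(policy, tools, question, max_steps=5):
    """Interleave reasoning and acting until the policy emits a final answer."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = policy(transcript)  # a model would generate this text
        transcript += step + "\n"
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip(), transcript
        match = ACTION_RE.search(step)
        if match is None:
            transcript += "Observation: could not parse an action\n"
            continue
        name, arg = match.groups()
        tool = tools.get(name)
        observation = tool(arg) if tool else f"unknown tool {name!r}"
        transcript += f"Observation: {observation}\n"
    return None, transcript  # step budget exhausted without an answer


def scripted_policy(transcript):
    """Hypothetical stand-in for an LLM: act once, then answer from the observation."""
    if "Observation:" not in transcript:
        return "Thought: I need the clip length.\nAction: duration[intro.mp4]"
    return "Thought: The tool reported the length.\nFinal Answer: 12 seconds"


TOOLS = {"duration": lambda name: "12 seconds" if name == "intro.mp4" else "not found"}
```

The `max_steps` budget matters in practice: without it, a model that never emits a final answer would loop indefinitely.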

Layer 5 advances to hierarchical planning with goal decomposition and task scheduling. Agents at this level can create multi-step plans, anticipate obstacles, and adapt strategies dynamically. For AI video generation systems, this translates to shot planning, scene composition, and narrative structure generation that extends beyond single-frame synthesis.
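
Hierarchical planning combines two steps: decompose a goal into a tree of subtasks, then schedule the primitive tasks so prerequisites run first. The sketch below is one simple way to do both, assuming a hand-written decomposition table (a planner model would normally produce it) and using Python's standard `graphlib.TopologicalSorter` (Python 3.9+) for scheduling. The task names are hypothetical examples.

```python
from graphlib import TopologicalSorter


def flatten(goal, decomposition):
    """Recursively expand a goal into its primitive tasks (the tree's leaves)."""
    children = decomposition.get(goal)
    if not children:
        return [goal]
    leaves = []
    for child in children:
        leaves.extend(flatten(child, decomposition))
    return leaves


def schedule(tasks, depends_on):
    """Order tasks so every prerequisite precedes its dependents (raises on cycles)."""
    graph = {task: set(depends_on.get(task, ())) for task in tasks}
    return list(TopologicalSorter(graph).static_order())


# Hypothetical goal tree for a short video.
DECOMPOSITION = {
    "make trailer": ["plan", "produce"],
    "plan": ["write script", "storyboard shots"],
    "produce": ["render shots", "edit cut"],
}
DEPENDS_ON = {
    "storyboard shots": ["write script"],
    "render shots": ["storyboard shots"],
    "edit cut": ["render shots"],
}
```

Keeping decomposition and dependencies separate makes replanning cheaper: when a step fails, the agent can rewrite one subtree or one edge and reschedule without regenerating the whole plan.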

Layer 6: Tool Use and Integration

Layer 6 represents the action layer where agents interact with external systems through APIs, function calling, and tool use. This includes integration with specialized models for image generation, video synthesis, audio processing, and content verification systems.

The technical implementation involves function schemas, parameter extraction from natural language, error handling, and result interpretation. Frameworks such as LangChain, and autonomous-agent projects such as AutoGPT, operate heavily at this layer, enabling agents to orchestrate multiple specialized AI models: combining large language models with diffusion models for video generation, or with voice cloning systems for synthetic media creation.
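
The four pieces named above (schema, parameter extraction, error handling, result interpretation) can be seen in a small dispatcher. This is a minimal sketch, not the API of LangChain or any other framework: it assumes the model emits a JSON object with `name` and `arguments` fields, and the `generate_image` tool and its handler are hypothetical placeholders.

```python
import json

# Hypothetical tool registry: each entry declares a schema and a handler.
TOOLS = {
    "generate_image": {
        "parameters": {"prompt": str, "width": int, "height": int},
        "required": ["prompt"],
        "handler": lambda prompt, width=512, height=512: f"image({prompt!r}, {width}x{height})",
    },
}


def call_tool(raw_call):
    """Parse a model-emitted JSON call, validate it against the schema, and run it."""
    try:
        call = json.loads(raw_call)
        spec = TOOLS[call["name"]]
    except (json.JSONDecodeError, KeyError, TypeError) as exc:
        return {"ok": False, "error": f"bad call: {exc}"}
    args = call.get("arguments", {})
    if not isinstance(args, dict):
        return {"ok": False, "error": "arguments must be an object"}
    missing = [p for p in spec["required"] if p not in args]
    if missing:
        return {"ok": False, "error": f"missing parameters: {missing}"}
    for name, value in args.items():
        expected = spec["parameters"].get(name)
        if expected is None or not isinstance(value, expected):
            return {"ok": False, "error": f"invalid parameter: {name}"}
    try:
        return {"ok": True, "result": spec["handler"](**args)}
    except Exception as exc:  # report tool failures to the agent instead of crashing
        return {"ok": False, "error": str(exc)}
```

Returning a structured error rather than raising lets the agent read the failure as an observation and retry with corrected arguments.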

Layer 7: Orchestration and Meta-Learning

The top layer handles system-level orchestration and self-improvement. This includes multi-agent coordination, workflow management, and meta-learning capabilities where agents learn from their own performance over time.

In the context of deepfake detection or digital authenticity verification, Layer 7 orchestration might coordinate multiple detection models, manage confidence thresholds, and adapt detection strategies based on emerging manipulation techniques. The system maintains oversight of lower layers, adjusting memory retention policies, reasoning strategies, and tool selection based on task performance.
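
One way to sketch this kind of orchestration is a weighted ensemble: each detector reports a manipulation probability, the orchestrator combines them, applies two confidence thresholds with a human-review band in between, and adjusts detector weights from labeled feedback. The class name, the threshold defaults, and the multiplicative weight update are all assumptions chosen for illustration, not a method prescribed by the framework.

```python
class DetectionOrchestrator:
    """Hypothetical Layer 7 coordinator over several deepfake detectors."""

    def __init__(self, detectors, flag_at=0.7, clear_below=0.3, learning_rate=0.5):
        self.detectors = detectors  # name -> callable(media) -> P(manipulated)
        self.weights = {name: 1.0 for name in detectors}
        self.flag_at = flag_at
        self.clear_below = clear_below
        self.learning_rate = learning_rate  # kept at or below 1 so weights stay positive

    def score(self, media):
        """Weighted average of all detector scores, plus the individual scores."""
        scores = {name: fn(media) for name, fn in self.detectors.items()}
        total = sum(self.weights.values())
        combined = sum(self.weights[name] * s for name, s in scores.items()) / total
        return combined, scores

    def verdict(self, media):
        combined, _ = self.score(media)
        if combined >= self.flag_at:
            return "manipulated"
        if combined < self.clear_below:
            return "authentic"
        return "needs human review"

    def feedback(self, media, is_manipulated):
        """Shrink each detector's weight in proportion to its error on a labeled example."""
        _, scores = self.score(media)
        target = 1.0 if is_manipulated else 0.0
        for name, s in scores.items():
            self.weights[name] *= 1.0 - self.learning_rate * abs(s - target)
```

The feedback loop is a simple form of the self-improvement described above: detectors that keep missing new manipulation techniques gradually lose influence over the combined verdict.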

Practical Implications for AI Developers

This layered framework provides several practical benefits. First, it enables modular development where teams can improve individual layers without rebuilding entire systems. A video generation agent might upgrade its planning layer (Layer 5) to better handle temporal coherence while maintaining existing memory and tool integration layers.
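
Modularity of this kind usually comes down to narrow interfaces between layers. The sketch below assumes a `Planner` protocol (via the standard `typing.Protocol`) so a planning upgrade can be dropped in without touching memory or tool code; `LinearPlanner`, `ShotPlanner`, and `VideoAgent` are hypothetical names used only for illustration.

```python
from typing import Callable, List, Protocol


class Planner(Protocol):
    """Layer 5 interface: anything with this method can serve as the planner."""

    def plan(self, goal: str) -> List[str]: ...


class LinearPlanner:
    def plan(self, goal: str) -> List[str]:
        return [f"generate {goal}"]


class ShotPlanner:
    """Drop-in upgrade: splits the goal into shots and adds a coherence check."""

    def __init__(self, shots: int = 3):
        self.shots = shots

    def plan(self, goal: str) -> List[str]:
        steps = [f"render shot {i + 1} of {goal}" for i in range(self.shots)]
        return steps + ["check temporal coherence across shots"]


class VideoAgent:
    def __init__(self, planner: Planner, memory: dict, execute: Callable[[str], str]):
        self.planner = planner  # Layer 5: swappable
        self.memory = memory    # Layers 1-3: unchanged by planner upgrades
        self.execute = execute  # Layer 6: unchanged by planner upgrades

    def run(self, goal: str) -> List[str]:
        results = [self.execute(step) for step in self.planner.plan(goal)]
        self.memory.setdefault("history", []).append(goal)
        return results
```

Swapping `LinearPlanner()` for `ShotPlanner()` changes only the constructor argument; the memory dictionary and the executor are reused as-is.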

Second, the framework facilitates debugging and performance analysis. When an agent fails to maintain character consistency across a generated video sequence, developers can isolate whether the issue lies in memory retrieval (Layers 1-3), reasoning and planning (Layers 4-5), or tool use (Layer 6).
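
Isolating the failing layer is easier when every call is tagged with the layer it belongs to. The sketch below assumes a hypothetical `traced` decorator that writes `(layer, function, status)` records to a global `TRACE` list; `recall_character` and `plan_shot` are toy stand-ins for real memory and planning components.

```python
import functools

TRACE = []  # (layer, function name, status) records, in call order


def traced(layer):
    """Tag a function with its layer and log whether each call succeeded."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            try:
                result = fn(*args, **kwargs)
            except Exception as exc:
                TRACE.append((layer, fn.__name__, f"error: {exc}"))
                raise
            TRACE.append((layer, fn.__name__, "ok"))
            return result
        return wrapper
    return decorator


def first_failing_layer(trace):
    """Return (layer, function) for the first failed call, or None if all succeeded."""
    for layer, name, status in trace:
        if status != "ok":
            return layer, name
    return None


@traced("Layers 1-3 (memory)")
def recall_character(name):
    store = {"Ava": "red jacket, short hair"}
    return store[name]  # raises KeyError if the character was never stored


@traced("Layers 4-5 (reasoning/planning)")
def plan_shot(description):
    return f"close-up, {description}"
```

If `plan_shot(recall_character("Ben"))` fails, the trace points at the memory layer rather than the planner, because the planner was never reached.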

Third, it provides a roadmap for capability assessment. Not all applications require all seven layers. A simple content authenticity checker might only need Layers 1, 4, and 6, while a fully autonomous synthetic media production system would leverage the complete stack.
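
A capability assessment like this can be written down as set arithmetic over the layer numbers. The enum member names below paraphrase this article's layer descriptions, and the two profiles encode only the examples given above; both are illustrative assumptions rather than a standard taxonomy.

```python
from enum import IntEnum


class Layer(IntEnum):
    EPISODIC_MEMORY = 1
    SEMANTIC_MEMORY = 2
    WORKING_MEMORY = 3
    REASONING = 4
    PLANNING = 5
    TOOL_USE = 6
    ORCHESTRATION = 7


# Required layers per application, taken from the examples in the text.
PROFILES = {
    "authenticity checker": {Layer.EPISODIC_MEMORY, Layer.REASONING, Layer.TOOL_USE},
    "autonomous media production": set(Layer),  # the complete stack
}


def missing_layers(profile, implemented):
    """List the layers a profile requires that the system does not yet implement."""
    return sorted(PROFILES[profile] - set(implemented))
```

Because `IntEnum` members compare equal to their integer values, `implemented` can be given either as `Layer` members or as plain layer numbers.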

Beyond the Hype

By decomposing agentic AI into concrete technical layers, this framework cuts through industry hype to reveal actual architectural requirements. As AI video generation and synthetic media tools become more autonomous—handling everything from concept development to final rendering—understanding these layered capabilities becomes essential for both creators and those working on detection and verification systems.

The framework also highlights gaps in current implementations. Many systems marketed as "agentic" operate only at Layers 1-4, lacking hierarchical planning, true tool integration, and meta-learning (Layers 5-7). This technical precision helps the industry move beyond buzzwords toward meaningful architectural discussions about autonomous AI systems.

Stay informed on AI video and digital authenticity. Follow Skrew AI News.